The analysis focuses on evaluating the impact of Double Zero on Solana’s performance across three layers — gossip latency, block propagation, and block rewards.

Based on shred_insert_is_full logs, block arrival times through DZ were initially delayed compared to the public Internet, but the gap narrowed over time.

DoubleZero (DZ) is a purpose-built network underlay for distributed systems that routes consensus-critical traffic over a coordinated fiber backbone rather than the public Internet. The project positions itself as a provider of routing and policy controls tailored to validators and other latency-sensitive nodes. In practical terms, it provides operators with tunneled connectivity and a separate addressing plane, aiming to cut latency and jitter, smooth bandwidth, and harden nodes against volumetric noise and targeted attacks.

The design goal is straightforward, albeit ambitious: move the heaviest, most timing-sensitive parts of blockchain networking onto dedicated fiber-optic and subsea cables. In DoubleZero’s framing, that means prioritizing block propagation and transaction ingress, reducing cross-continent tail latency, and stripping malformed or spammy traffic before it hits validator sockets.

Solana’s throughput is gated as much by network effects as by execution, so any reduction in leader-to-replica and replica-to-leader propagation delays can translate into higher usable TPS. Overall, DoubleZero expects real-world performance on mainnet to improve substantially as the network is adopted by the validator cluster, with latency reduced by up to 100 ms (with zero jitter) along some routes and the bandwidth available to the average Solana validator increased tenfold.

In this article, we evaluate the effects of DoubleZero on the Solana network by comparing its performance with that of the public Internet. We measure how the use of DZ influences network latency, throughput, and block propagation efficiency, and we assess whether these network-level changes translate into differences in slot extracted value.

Solana validators natively report gossip-layer round-trip time (RTT) through periodic ping–pong messages exchanged among peers. These measurements are logged automatically by the validator process and provide a direct view of peer-to-peer latency at the protocol level, as implemented in the Agave codebase.

To assess the impact of DoubleZero, we analyzed these validator logs rather than introducing any external instrumentation. We used two co-located machines with identical hardware and network conditions, referred to as Identity 1 and Identity 2 throughout the analysis.

In addition to gossip-layer RTT, we also examined block propagation time, which represents the delay between when a block is produced by the leader and when it becomes fully available to a replica. For this, we relied on the shred_insert_is_full log event emitted by the validator whenever all shreds of a block have been received and the block can be reconstructed locally. This event provides a precise and consistent timestamp for block arrival across validators, enabling a direct comparison of propagation delays between the two identities.

We chose shred_insert_is_full instead of the more common bank_frozen event because differences between bank_frozen timestamps mostly capture local timing jitter rather than propagation delay. A detailed discussion of this choice is provided in the Appendix.

The experiment was conducted across seven time windows:

This alternating setup enables us to disentangle two sources of variation: intrinsic differences due to hardware, time-of-day, and network conditions, and the specific effects introduced by routing one validator through DZ. The resulting RTT distributions offer a direct measure of how DoubleZero influences gossip-layer latency within the Solana network.

This section examines how Double Zero influences the Solana network at three complementary levels: peer-to-peer latency, block propagation, and validator block rewards. We first analyze changes in gossip-layer RTT to quantify DZ’s direct impact on communication efficiency between validators. We then study block propagation times derived from the shred_insert_is_full event to assess how modified RTTs affect block dissemination. Finally, we investigate fee distributions to determine whether DZ yields measurable differences in extracted value (EV) across slots. Together, these analyses connect the network-level effects of DZ to their observable consequences on Solana’s operational and economic performance.

The gossip layer in Solana functions as the control plane: it handles peer discovery, contact-information exchange, status updates (e.g., ledger height, node health) and certain metadata needed for the data plane to operate efficiently. Thus, by monitoring RTT in the gossip layer we are capturing a meaningful proxy of end-to-end peer connectivity, latency variability, and the general health of validator interconnectivity. Since the effectiveness of the data plane (block propagation, transaction forwarding) depends fundamentally on the control-plane’s ability to keep peers informed and connected, any reduction in gossip-layer latency can plausibly contribute to faster, more deterministic propagation of blocks or shreds.

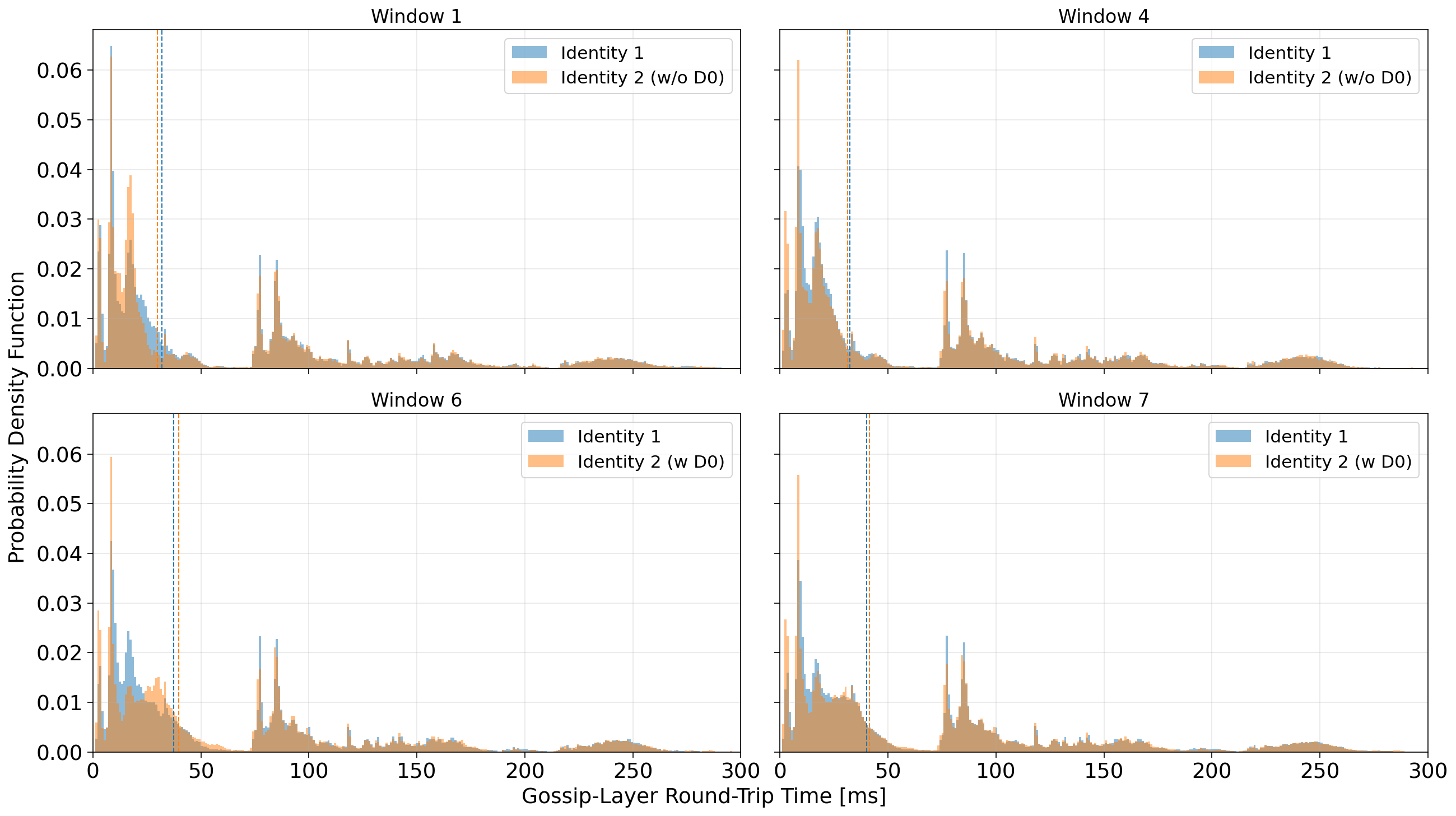

In Figure 1, we present the empirical probability density functions (PDFs) of gossip-layer round-trip time (RTT) from our two identities under different experimental windows.

Across all windows, the RTT distributions are multimodal, which is expected in Solana’s gossip layer given its geographically diverse network topology. The dominant mode below 20–30 ms likely corresponds to peers located in the same region or data center, while the secondary peaks around 80–120 ms and 200–250 ms reflect transcontinental routes (for instance, between North America and Europe or Asia).
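One simple way to quantify the modes described above is to count the share of RTT samples falling into each latency band. The sketch below is illustrative only: the band edges are assumptions read off the approximate ranges in the text, the names `BANDS`, `band_of`, and `band_shares` are hypothetical, and the sample values are synthetic rather than taken from the validator logs.

```python
from collections import Counter

# Illustrative band edges in milliseconds, taken from the approximate modes
# described in the text. They are assumptions for this sketch, not thresholds
# used in the analysis.
BANDS = [
    ("local", 0.0, 30.0),
    ("mid_haul", 80.0, 120.0),
    ("long_haul", 200.0, 250.0),
]


def band_of(rtt_ms):
    """Return the name of the band containing rtt_ms, or 'other'."""
    for name, low, high in BANDS:
        if low <= rtt_ms < high:
            return name
    return "other"


def band_shares(rtt_samples_ms):
    """Fraction of RTT samples that fall into each band."""
    if not rtt_samples_ms:
        raise ValueError("need at least one RTT sample")
    counts = Counter(band_of(x) for x in rtt_samples_ms)
    total = len(rtt_samples_ms)
    names = [name for name, _, _ in BANDS] + ["other"]
    return {name: counts[name] / total for name in names}


# Synthetic RTT samples in milliseconds (not measured data).
samples = [4.0, 12.5, 18.0, 25.0, 95.0, 110.0, 210.0, 60.0]
shares = band_shares(samples)
```

Comparing these shares between windows gives a compact summary of how much probability mass moves between modes, which complements the visual reading of the PDFs.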

In Windows 1 and 4, when both validators used the public Internet, the RTT distributions for Identity 1 and Identity 2 largely overlap. Their medians mostly coincide, and the overall shape of the distribution is very similar, confirming that the two machines experience comparable baseline conditions and that intrinsic hardware or routing differences are negligible.

A mild divergence appears in Windows 6 and 7, when Identity 2 is connected through DZ. The median RTT of Identity 2 shifts slightly to the right, indicating a small increase in the typical round-trip time relative to the public Internet baseline. This shift is primarily driven by a dilution of the fast peer group: the density of peers within the 10–20 ms range decreases, while that population redistributes toward higher latency values, up to about 50–70 ms. For longer-distance modes (around 80–100 ms and beyond) it seems the RTT is largely unaffected.

Overall, rather than a uniform improvement, these distributions suggest that DZ introduces a small increase in gossip-layer latency for nearby peers, possibly reflecting the additional routing path through the DZ tunnel.

When focusing exclusively on validator peers, the distributions confirm the effect of DZ on nearby peers (below 50 ms). However, a clearer pattern emerges for distant peers, those with RTTs exceeding roughly 70–80 ms. In Windows 6 and 7, where Identity 2 is connected through DZ, the peaks in the right tail of the PDF shift to the left, signaling a modest but consistent reduction in latency for long-haul validator connections.

Despite this gain, the median RTT does not improve: it still increases slightly when connecting through DoubleZero. Most validators are located within Europe, so the aggregate distribution is dominated by short- and mid-range connections. Consequently, while DZ reduces latency for a subset of geographically distant peers, this improvement is insufficient to significantly shift the global RTT distribution’s central tendency.

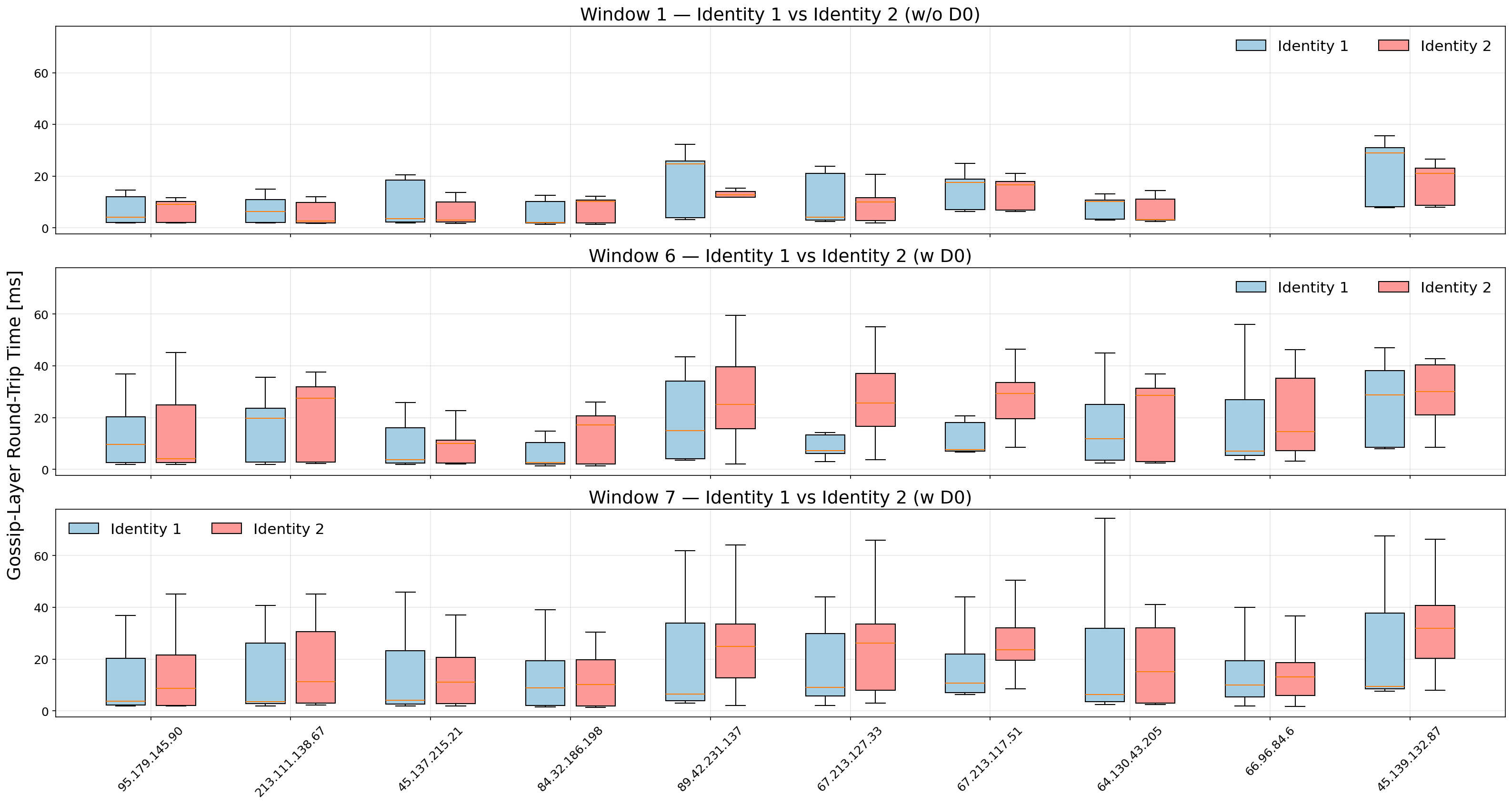

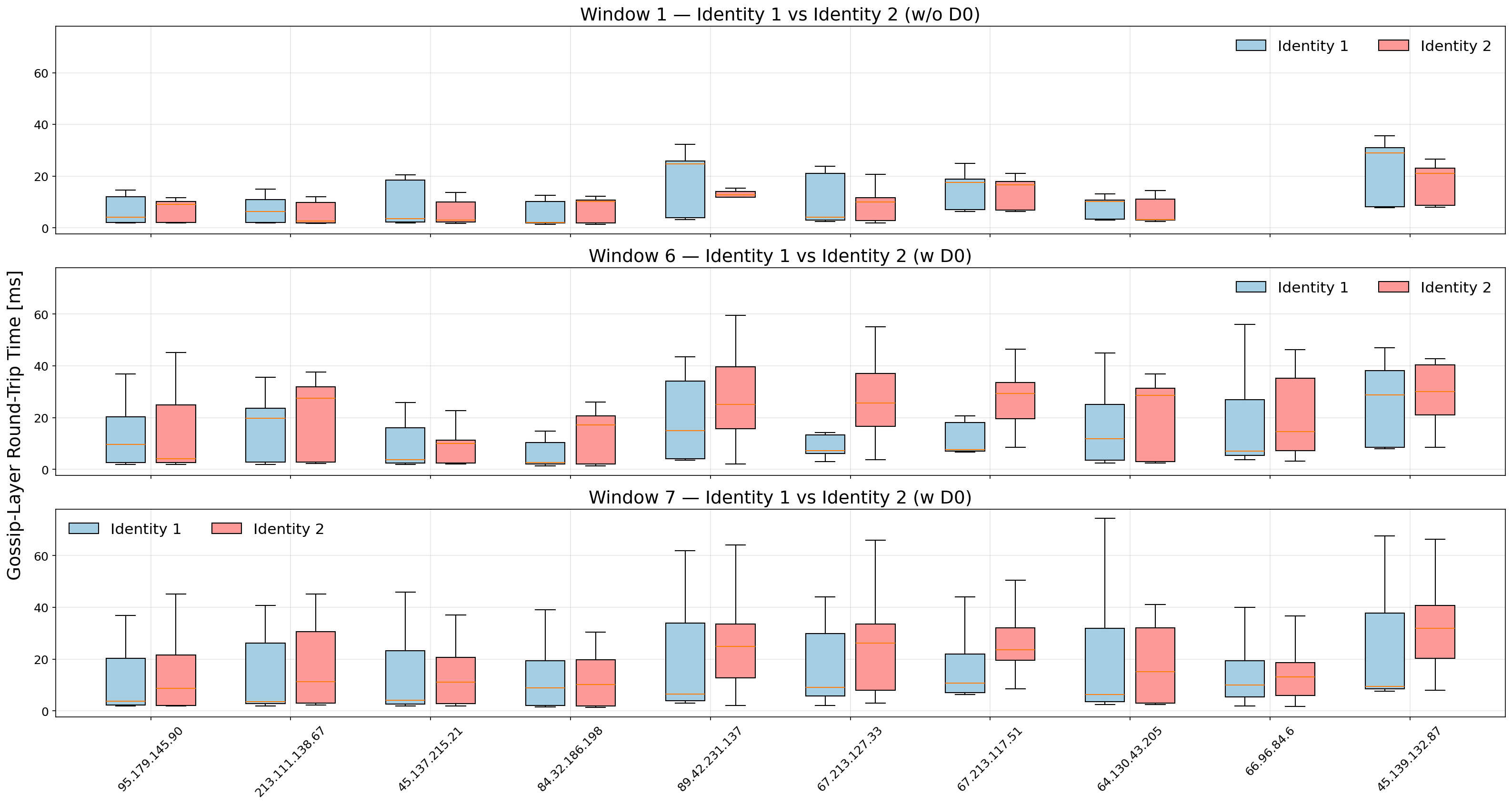

To better visualize the effect highlighted in Fig. 2, we can focus on individual validator peers located within the 60 ms latency range, see Fig. 3. These results confirm that the modest rightward shift observed in the aggregate distributions originates primarily from local peers, whose previously low latencies increase slightly when routed through DZ. For example, the peer 67.213.127.33 (Amsterdam) moves from a median RTT below 10 ms in the baseline window to above 20 ms under DZ. Similar, though less pronounced, upward shifts occur for several other nearby peers.

For distant validators, the introduction of DoubleZero systematically shifts the median RTT downward, see Fig. 4. This improvement is especially evident for peers such as 15.235.232.142 (Singapore), where the entire RTT distribution is displaced toward lower values and the upper whiskers contract, suggesting reduced latency variance. The narrowing of the boxes in many cases further implies improved consistency in round-trip timing.
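The per-peer comparison read off the box plots (median shift and box width) can be summarized numerically. The following is a minimal sketch, assuming RTT samples for one peer are available as plain lists for a baseline window and a DZ window. The function names `box_stats` and `compare_peer` are hypothetical, and the numbers are synthetic, not measurements from this study.

```python
from statistics import quantiles


def box_stats(samples_ms):
    """Median and interquartile range (box width) of RTT samples in ms."""
    q1, q2, q3 = quantiles(samples_ms, n=4, method="inclusive")
    return {"median": q2, "iqr": q3 - q1}


def compare_peer(baseline_ms, dz_ms):
    """Change in median and IQR when moving from the baseline to DZ.

    Negative values mean lower latency or a narrower box under DZ.
    """
    base, dz = box_stats(baseline_ms), box_stats(dz_ms)
    return {
        "median_shift_ms": dz["median"] - base["median"],
        "iqr_change_ms": dz["iqr"] - base["iqr"],
    }


# Synthetic RTT samples for one hypothetical distant peer (not measured data).
baseline = [180.0, 185.0, 190.0, 195.0, 200.0]
through_dz = [160.0, 162.0, 164.0, 166.0, 168.0]
change = compare_peer(baseline, through_dz)
```

For this synthetic peer the median drops by 26 ms and the interquartile range narrows by 6 ms, the same kind of pattern the text describes for long-haul connections.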

Taken together, these results confirm that DZ preferentially benefits geographically distant peers, where conventional Internet routing is less deterministic and often suboptimal. The impact, while moderate in absolute terms, is robust across peers and windows, highlighting DZ’s potential to improve inter-regional validator connectivity without increasing jitter.

Overall, these results capture a snapshot of Double Zero’s early-stage performance. As we will show in the next subsection, the network has improved markedly since its mainnet launch. A comparison of MTR tests performed on October 9 and October 18 highlights this evolution. Initially, routes between Amsterdam nodes (79.127.239.81 → 38.244.189.101) involved up to seven intermediate hops with average RTTs around 24 ms, while the Amsterdam–Frankfurt route (79.127.239.81 → 64.130.57.216) exhibited roughly 29 ms latency and a similar hop count. By mid-October, both paths had converged to two to three hops, with RTTs reduced to ~2 ms for intra-Amsterdam traffic and ~7 ms for Amsterdam–Frankfurt. This reduction in hop count and latency demonstrates tangible routing optimization within the DZ backbone, suggesting that path consolidation and improved internal peering have already translated into lower physical latency.

In Solana, the time required to receive all shreds of a block offers a precise and meaningful proxy for block propagation time. Each block is fragmented into multiple shreds, which are transmitted through the Turbine protocol, a tree-based broadcast mechanism designed to efficiently distribute data across the validator network. When a validator logs the shred_insert_is_full event, it indicates that all expected shreds for a given slot have been received and reassembled, marking the earliest possible moment at which the full block is locally available for verification. This timestamp therefore captures the network component of block latency, isolated from execution or banking delays.

However, the measure also reflects the validator’s position within Turbine’s dissemination tree. Nodes closer to the root—typically geographically or topologically closer to the leader—receive shreds earlier, while those situated deeper in the tree experience higher cumulative delays, as each hop relays shreds further downstream. This implies that differences in block arrival time across validators are not solely due to physical or routing latency, but also to the validator’s assigned role within Turbine’s broadcast hierarchy. Consequently, block arrival time must be interpreted as a convolution of propagation topology and network transport performance.

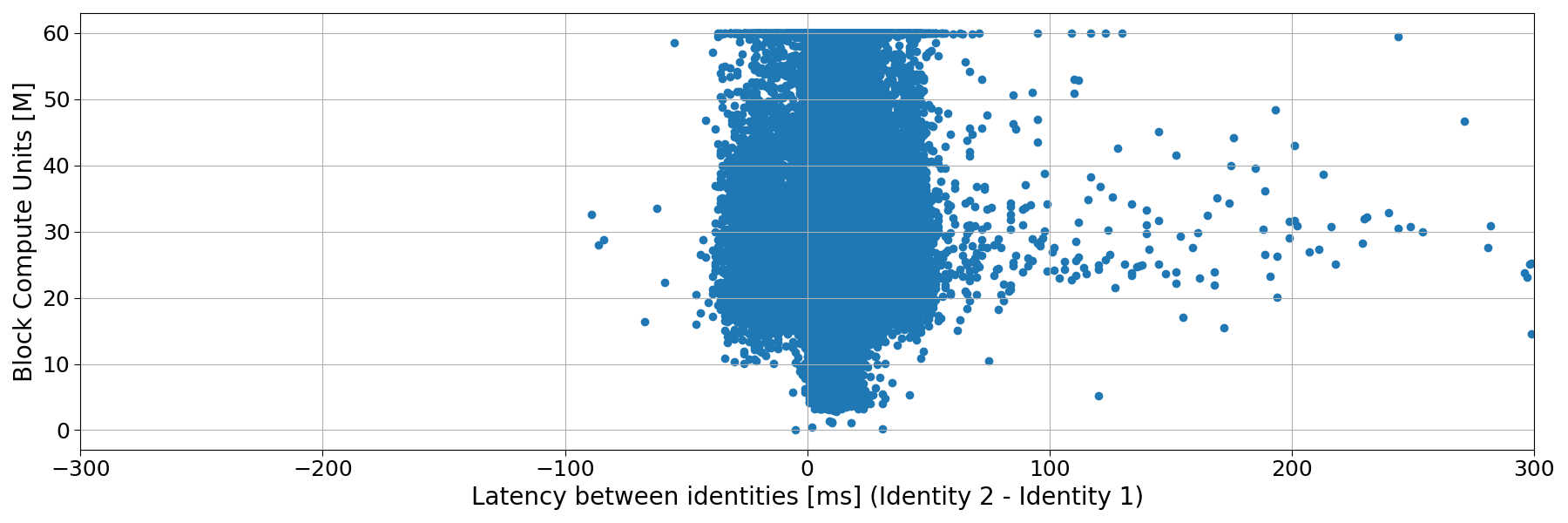

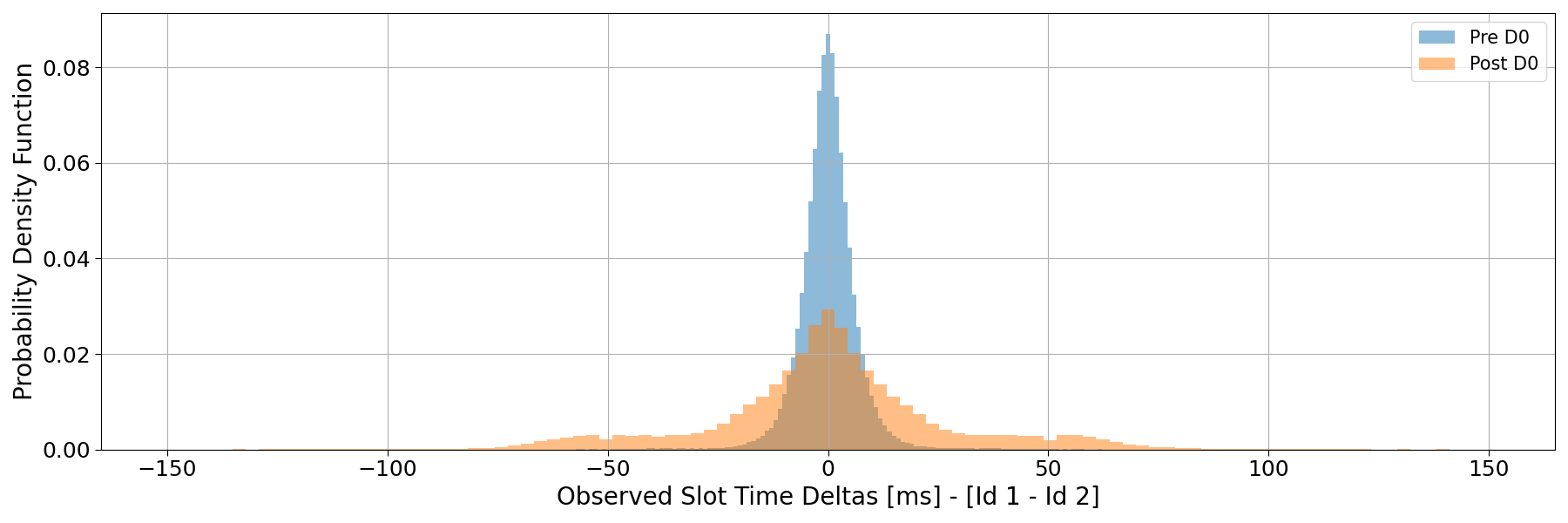

The figure below presents the empirical probability density functions (PDFs) of latency differences between our two validators, measured as the time difference between the shred_insert_is_full events for the same slot. Positive values correspond to blocks arriving later at Identity 2 (the validator connected through Double Zero).
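The per-slot latency difference defined above can be sketched as follows, assuming the shred_insert_is_full timestamps have already been extracted from each validator's logs into a mapping from slot to timestamp in milliseconds. The log parsing itself is out of scope here. The function names are hypothetical and the timestamps are synthetic.

```python
def arrival_deltas_ms(identity1_ts_ms, identity2_ts_ms):
    """Per-slot difference t(Identity 2) - t(Identity 1), in milliseconds.

    Only slots observed by both identities are compared. Positive values
    mean the block became fully available later at Identity 2.
    """
    common_slots = identity1_ts_ms.keys() & identity2_ts_ms.keys()
    return {
        slot: identity2_ts_ms[slot] - identity1_ts_ms[slot]
        for slot in sorted(common_slots)
    }


def share_later_at_identity2(deltas):
    """Fraction of compared slots that arrived later at Identity 2."""
    if not deltas:
        raise ValueError("no common slots to compare")
    return sum(1 for d in deltas.values() if d > 0) / len(deltas)


# Synthetic timestamps in ms (not measured data). Slot 103 is missing from
# Identity 2 and is therefore skipped.
identity1 = {100: 1_000, 101: 1_400, 102: 1_800, 103: 2_200}
identity2 = {100: 1_012, 101: 1_395, 102: 1_830}

deltas = arrival_deltas_ms(identity1, identity2)
later_share = share_later_at_identity2(deltas)
```

Restricting the comparison to slots seen by both identities avoids mixing in gaps in either log. It also assumes the two machines' clocks are synchronized well enough for millisecond-level differences to be meaningful.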

In the early windows (2 and 3), when Double Zero was first deployed, the distribution exhibits a pronounced right tail, indicating that blocks frequently arrived substantially later at the DZ-connected validator. This confirms that during the initial deployment phase, DZ added a measurable delay to block dissemination, consistent with the higher peer latency observed in the gossip-layer analysis.

Over time, however, the situation improved markedly. In windows 5–7, the right tail becomes much shorter, and the bulk of the distribution moves closer to zero, showing that block arrival delays through DZ decreased substantially. Yet, even in the most recent window, the distribution remains slightly right-skewed, meaning that blocks still tend to reach the DZ-connected validator marginally later than the one on the public Internet.

This residual offset is best explained by the interaction between stake distribution and Turbine’s hierarchical structure. In Solana, a validator’s likelihood of occupying an upper position in the Turbine broadcast tree increases with its stake weight. Since the majority of Solana’s stake is concentrated in Europe, European validators are frequently placed near the top of the dissemination tree, receiving shreds directly from the leader or after only a few hops. When a validator connects through Double Zero (DZ), however, we have seen that EU–EU latency increases slightly compared to the public Internet. As a result, even if the DZ-connected validator occupies a similar topological position in Turbine, the added transport latency in the local peer group directly translates into slower block arrival times. Therefore, the persistent right-skew observed in the distribution is primarily driven by the combination of regional stake concentration and the modest latency overhead introduced by DZ in short-range European connections, rather than by a deeper tree position or structural topology change.

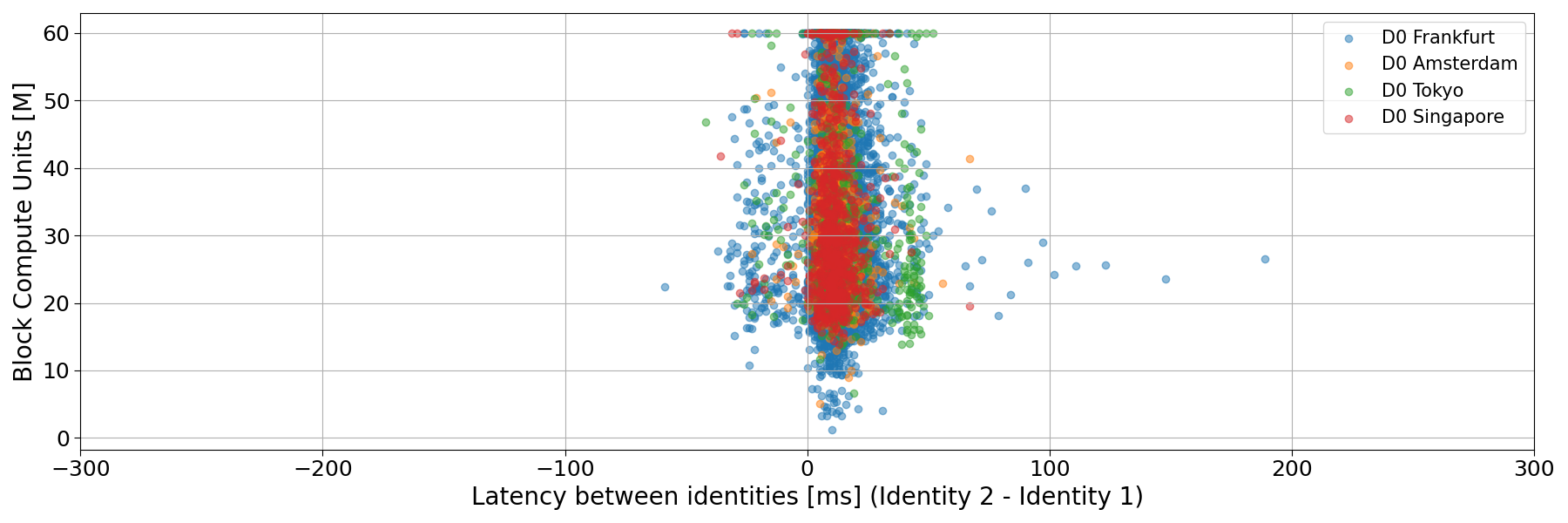

To further assess the geographical component of Double Zero’s (DZ) impact, we isolated slots proposed by leaders connected through DZ and grouped them by leader region. For leaders located in more distant regions such as Tokyo, DZ provides a clear advantage: the latency difference between identities shifts leftward, indicating that blocks from those regions are received faster through DZ. Hence, DZ currently behaves as a latency equalizer, narrowing regional disparities in block dissemination rather than uniformly improving performance across all geographies.

Finally, we see no correlation between block size (in terms of Compute Units) and propagation time.

It has been demonstrated that timing advantages can directly affect validator block rewards on Solana, as shown in prior research. However, in our view, this phenomenon does not translate into an organic, system-wide improvement simply by reducing latency at the transport layer. The benefit of marginal latency reduction is currently concentrated among a few highly optimized validators capable of exploiting timing asymmetries through sophisticated scheduling or transaction ordering strategies. In the absence of open coordination mechanisms—such as a block marketplace or latency-aware relay layer—the overall efficiency gain remains private rather than collective, potentially reinforcing disparities instead of reducing them.

Since any network perturbation can in principle alter the outcome of block rewards, we tested the specific effect of DoubleZero on extracted value (EV). Building on the previously observed changes in block dissemination latency, we examined whether routing through DZ produces measurable differences in block EV—that is, whether modifying the underlying transport layer influences the value distribution of successfully produced slots.

Figure 9 compares validators connected through DoubleZero with other validators in terms of block fees, used here as a proxy for extracted value (EV). Each line represents a 24-hour sliding window average, while shaded regions correspond to the 5th–95th percentile range. Dashed lines on the secondary axis show the sample size over time.
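The curves described above combine a rolling mean with a percentile band and a sample count. The sketch below shows one way to compute such statistics, under stated assumptions: the window is trailing (covering the 24 hours up to each sample), percentiles use the nearest-rank definition, and the input is a time-sorted list of `(unix_timestamp_seconds, fee_in_sol)` pairs. The article does not specify these details, and the function names and data are hypothetical.

```python
import math


def percentile(sorted_values, p):
    """Nearest-rank percentile of an ascending, non-empty list."""
    rank = max(1, math.ceil(p / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def trailing_window_stats(samples, window_s=24 * 3600):
    """Rolling mean, p5, p95 and sample size over a trailing time window.

    samples: list of (timestamp_s, fee) tuples sorted by timestamp.
    Each output row covers the half-open interval (ts - window_s, ts].
    """
    rows = []
    start = 0
    for end, (ts, _) in enumerate(samples):
        # Drop samples that have fallen out of the window.
        while samples[start][0] <= ts - window_s:
            start += 1
        fees = sorted(fee for _, fee in samples[start:end + 1])
        rows.append({
            "ts": ts,
            "n": len(fees),
            "mean": sum(fees) / len(fees),
            "p5": percentile(fees, 5),
            "p95": percentile(fees, 95),
        })
    return rows


# Synthetic block fees (not measured data): two blocks an hour apart,
# then one block 25 hours after the first.
fees = [(0, 1.0), (3600, 3.0), (90_000, 5.0)]
stats = trailing_window_stats(fees)
```

Tracking the sample size `n` alongside the statistics matters here, because wide percentile bands over few blocks can look like an effect when they are mostly noise.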

Before October 9, DZ-connected validators exhibit a slightly higher mean and upper percentile (p95) for block fees, together with larger fluctuations, suggesting sporadic higher-value blocks. During the market crash period (October 10–11), this difference becomes temporarily more pronounced: the mean block fee for DZ-connected validators exceeds that of others, and their p95 rises well above that of the other group. After October 11, this pattern disappears, and both groups show virtually identical distributions.

To verify whether the pre–October 9 difference and the market crash upside movement reflected a real effect or a statistical artifact, we applied a permutation test on the average block fee, cf. Dune queries 5998293 and 6004753. This non-parametric approach evaluates the likelihood of observing a mean difference purely by chance: it repeatedly shuffles the DZ and non-DZ labels between samples, recalculating the difference each time to build a reference distribution under the null hypothesis of no effect.
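The label-shuffling procedure described above can be sketched in a few lines. This is a generic permutation test on the difference of means, not the Dune queries used for the analysis. The two-sided comparison, the number of permutations, the add-one p-value correction, and the function name are all assumptions of this sketch, and the data are synthetic.

```python
import random


def permutation_test_mean_diff(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test on mean(group_a) - mean(group_b).

    Returns (observed_difference, p_value). The p-value is the share of
    label shufflings whose absolute mean difference is at least as large
    as the observed one, with an add-one correction so it is never zero.
    """
    rng = random.Random(seed)
    n_a = len(group_a)
    observed = sum(group_a) / n_a - sum(group_b) / len(group_b)

    pooled = list(group_a) + list(group_b)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign group labels at random
        perm_a, perm_b = pooled[:n_a], pooled[n_a:]
        diff = sum(perm_a) / len(perm_a) - sum(perm_b) / len(perm_b)
        if abs(diff) >= abs(observed):
            extreme += 1

    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Synthetic block fees (not measured data): two clearly separated groups.
dz_fees = [10.0, 11.0, 12.0, 13.0, 14.0, 15.0, 16.0, 17.0]
other_fees = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
delta, p_value = permutation_test_mean_diff(dz_fees, other_fees, n_permutations=2_000)
```

Because the test relies only on shuffling labels, it makes no assumption about the shape of the fee distribution, which is useful for heavy-tailed quantities such as block fees.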

The resulting mean block fee delta of 0.00043 SOL, with a p-value of 0.1508, during the period pre–October 9 indicates that the observed difference lies well within the range expected from random sampling. In other words, the apparent gain is not statistically meaningful and is consistent with sample variance rather than a causal improvement due to DZ connectivity.

When restricting the analysis to the market crash period (October 10–11), the difference in mean extracted value (EV) between DZ-connected and other validators becomes more pronounced. In this interval, the mean block fee delta rises to ~0.00499 SOL, with a corresponding p-value of 0.0559. This borderline significance suggests that under high-volatility conditions, when order flow and transaction competition intensify, reduced latency on long-haul routes may temporarily yield measurable EV gains.

However, given the limited sample size and the proximity of the p-value to the 0.05 threshold, this result should be interpreted cautiously: it may reflect short-term network dynamics rather than a persistent causal effect. Further tests across different volatility regimes are needed to confirm whether such transient advantages recur systematically.

This study assessed the effects of Double Zero on the Solana network through a multi-layer analysis encompassing gossip-layer latency, block dissemination time, and block rewards.

At the network layer, Solana’s native gossip logs revealed that connecting a validator through DZ introduces an increase in round-trip time among geographically close peers within Europe, while simultaneously reducing RTT for distant peers (e.g., intercontinental connections such as Europe–Asia). This pattern indicates that DZ acts as a latency equalizer, slightly worsening already short paths but improving long-haul ones. Over time, as the network matured, overall latency performance improved. Independent MTR measurements confirmed this evolution, showing a sharp reduction in hop count and end-to-end delay between October 9 and October 18, consistent with substantial optimization of the DZ backbone.

At the propagation layer, analysis of shred_insert_is_full events showed that the time required to receive all shreds of a block — a proxy for block dissemination latency — improved over time as DZ routing stabilized. Early measurements exhibited longer block arrival times for the DZ-connected validator, while later windows showed a markedly narrower latency gap. Nevertheless, blocks still arrived slightly later through DZ, consistent with Solana’s Turbine topology and stake distribution: since most high-stake validators are located in Europe and thus likely occupy upper levels in Turbine’s broadcast tree, even small EU–EU latency increases can amplify downstream propagation delays.

At the economic layer, we examined block fee distributions as a proxy for extracted value (EV). DZ-connected validators displayed slightly higher 24-hour average and upper-percentile fees before October 10, but this difference disappeared thereafter. A permutation test on pre–October 10 data confirmed that the apparent advantage is not statistically significant, and therefore consistent with random variation rather than a systematic performance gain.

Overall, the evidence suggests that DZ’s integration introduces mild overhead in local connections but provides measurable improvement for distant peers, particularly in intercontinental propagation. While these routing optimizations enhance global network uniformity, their economic impact remains negligible at the current adoption level.

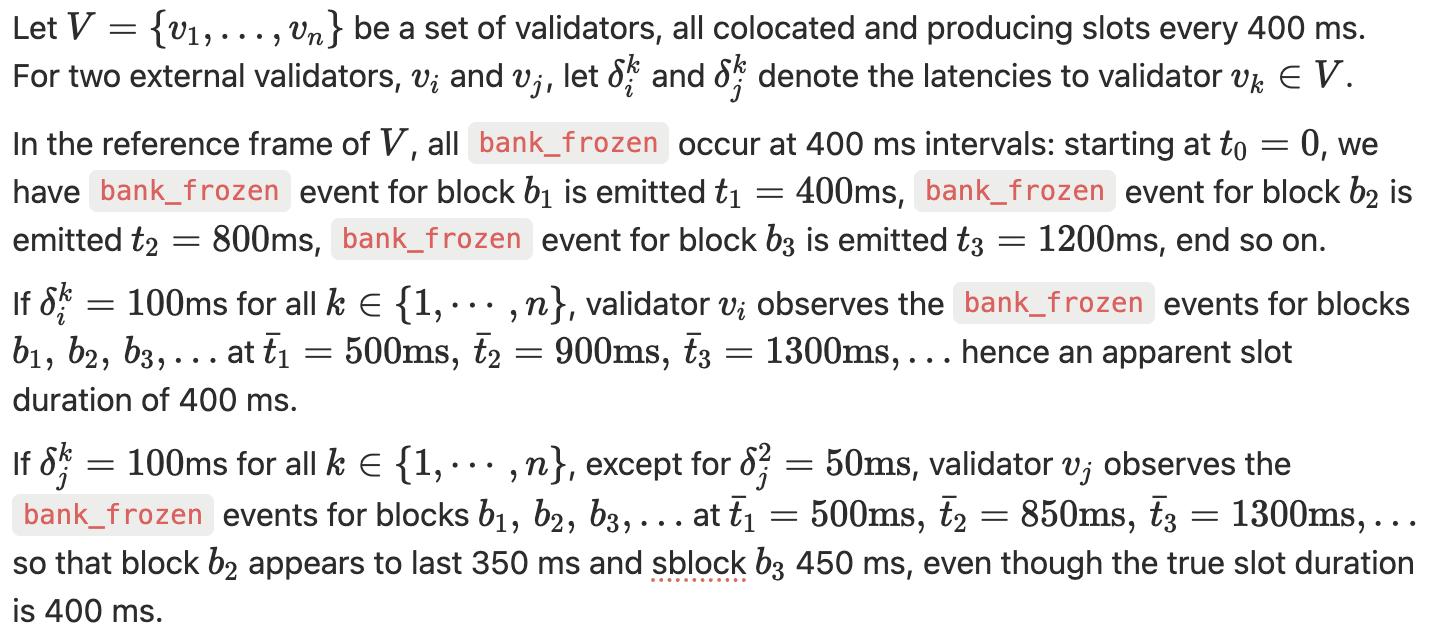

A natural candidate for measuring block arrival time in Solana is the bank_frozen log event, which marks the moment when a validator has completely recovered a block in a given bank. However, this signal introduces a strong measurement bias that prevents it from being used to infer true block propagation latency.

The bank_frozen event timestamp is generated locally by each validator, after all shreds have been received, reconstructed, and the bank has been executed. Consequently, the recorded time includes not only the network component (arrival of shreds) but also:

When comparing bank_frozen timestamps across validators, these effects dominate, producing a stationary random variable with zero mean and a standard deviation equal to the system jitter. This means that timestamp differences reflect internal timing noise rather than propagation delay.

Consider the following illustrative example.

In general, a local measurement for block time extracted from the bank_frozen event can be parametrized as

The figure below shows the distribution of time differences between bank_frozen events registered for the same slots across the two identities. As expected, the distribution is nearly symmetric around zero, confirming that the observed variation reflects only measurement jitter rather than directional latency differences.

A direct implication of this behaviour is the presence of an intrinsic bias in block time measurements as reported by several community dashboards. Many public tools derive slot times from the timestamp difference between consecutive bank_frozen events, thereby inheriting the same structural noise described above.

For instance, the Solana Compass dashboard reports a block time of 903.45 ms for slot 373928520. When computed directly from local logs, the corresponding bank_frozen timestamps yield 918 ms, whereas using the shred_insert_is_full events — which capture the completion of shred reception and exclude execution jitter — gives a more accurate value of 853 ms.

Similarly, for slot 373928536, the dashboard reports 419 ms, while the shred_insert_is_full–based estimate is 349 ms.
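The slot-time derivation discussed above, taking the difference between timestamps of the same event in consecutive slots, can be sketched as follows. The sketch assumes event timestamps have already been extracted into a mapping from slot to milliseconds. The function name is hypothetical and the values are synthetic, chosen only to show how execution jitter in one event stream changes the derived slot times.

```python
def slot_times_ms(event_ts_ms):
    """Slot time as t[slot] - t[slot - 1] for a single event type.

    Slots whose predecessor is missing from the mapping are skipped.
    """
    return {
        slot: ts - event_ts_ms[slot - 1]
        for slot, ts in sorted(event_ts_ms.items())
        if slot - 1 in event_ts_ms
    }


# Synthetic timestamps in ms for three consecutive slots (not measured data).
# The bank_frozen series includes variable local execution delay, while the
# shred_insert_is_full series is evenly spaced.
bank_frozen_ts = {10: 5_000, 11: 5_430, 12: 5_790}
shred_full_ts = {10: 4_900, 11: 5_300, 12: 5_700}

frozen_slot_times = slot_times_ms(bank_frozen_ts)
shred_slot_times = slot_times_ms(shred_full_ts)
```

In this toy case the bank_frozen series gives 430 ms and 360 ms for two slots that the shred-based series both puts at 400 ms, which mirrors the kind of discrepancy seen between the dashboard figures and the shred_insert_is_full estimates.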