Lido v3 represents the most meaningful architectural shift in Lido’s history. Rather than simply improving liquid staking mechanics, it introduces a modular vault-based framework that fundamentally reshapes how staking interacts with DeFi. For institutions in particular, Lido v3 opens the door to configurable staking, operator choice, and native integration with on-chain strategies, all while preserving custody and control.

At Chorus One, we have been exploring the Lido v3 architecture, including building a proof-of-concept leveraged staking strategy using Morpho. This article is a technical walkthrough of Lido Vault Strategies: how they work, why they matter, and what they unlock for sophisticated stakers.

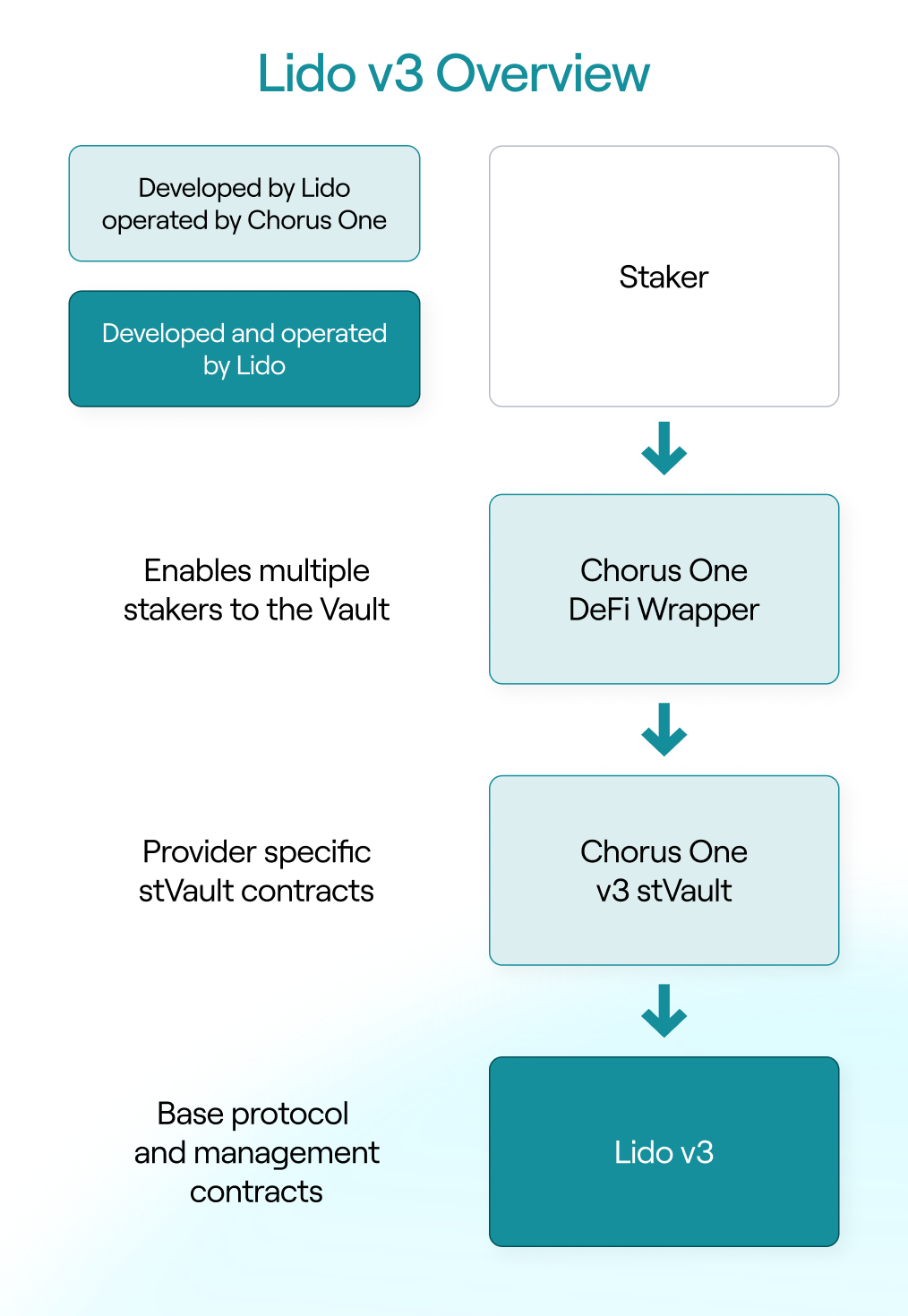

Lido v3 introduces stVaults, a new primitive that decouples staking infrastructure from token issuance and DeFi usage. At a high level, Lido v3 complements the Lido Core Protocol with a layered architecture composed of three core components.

At the base is the Lido v3 protocol itself, which handles validator management, accounting, and withdrawals. On top of that sits the stVault, a vault contract deployed and operated by a specific node operator. Importantly, each stVault accepts stake from a single address only – a deliberate design choice that enables operator selection and simplifies accounting.

To enable multiple users to access the same vault, Lido introduces a DeFi Wrapper. This wrapper aggregates deposits from many users and interacts with the stVault on their behalf. When no strategy is applied, users can stake through the wrapper and receive staking exposure without any additional DeFi logic.
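To make the wrapper's role concrete, here is a toy Python model of how a wrapper might pool many users' deposits into a single-depositor vault and track each user's claim with pro-rata shares. The class and method names (`ToyDefiWrapper`, `deposit`, `accrue_rewards`, `redeem`) are hypothetical; the actual Lido v3 contracts are written in Solidity and their accounting will differ in detail.

```python
from dataclasses import dataclass, field


@dataclass
class ToyDefiWrapper:
    """Hypothetical pooled-deposit wrapper in front of a single-depositor vault.

    The vault only ever sees one depositor (this wrapper); per-user ownership
    is tracked internally as shares.
    """

    vault_assets: float = 0.0  # ETH the wrapper has staked into the vault
    total_shares: float = 0.0
    shares: dict[str, float] = field(default_factory=dict)

    def deposit(self, user: str, amount: float) -> float:
        # Mint shares at the current share price (1:1 for the first deposit).
        if self.total_shares == 0:
            minted = amount
        else:
            minted = amount * self.total_shares / self.vault_assets
        self.vault_assets += amount
        self.total_shares += minted
        self.shares[user] = self.shares.get(user, 0.0) + minted
        return minted

    def accrue_rewards(self, amount: float) -> None:
        # Staking rewards raise vault assets without minting new shares,
        # so every holder's claim grows pro rata.
        self.vault_assets += amount

    def balance_of(self, user: str) -> float:
        if self.total_shares == 0:
            return 0.0
        return self.shares.get(user, 0.0) * self.vault_assets / self.total_shares

    def redeem(self, user: str, share_amount: float) -> float:
        # Burn shares and return the corresponding ETH claim.
        held = self.shares.get(user, 0.0)
        if share_amount > held:
            raise ValueError("insufficient shares")
        assets = share_amount * self.vault_assets / self.total_shares
        self.shares[user] = held - share_amount
        self.total_shares -= share_amount
        self.vault_assets -= assets
        return assets
```

For example, if Alice deposits 10 ETH, Bob deposits 30 ETH, and the vault then earns 4 ETH in rewards, Alice's claim becomes 11 ETH and Bob's 33 ETH, while the vault itself still deals with a single address.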

The shift to stVaults is not just a technical upgrade – it is a structural change that directly addresses institutional requirements.

First, stVaults allow stakers to choose their staking partner. Unlike today’s pooled Lido model, where the protocol distributes stake across operators, institutions using stVaults can explicitly select a validator they trust, one that aligns with their compliance and operational requirements.

Second, Lido v3 enables staking without minting. Institutions are no longer forced to hold liquid staking tokens on their balance sheet, eliminating accounting and custody complications while retaining full staking exposure.

Third, when minting is desired, vaults support direct minting of wstETH, removing the need for additional swaps before entering DeFi. This small design choice has meaningful implications for efficiency, gas costs, and operational simplicity.

Finally, stVaults introduce a clean interface for custom staking strategies, enabling staking and DeFi interactions to be composed into a single, auditable workflow.

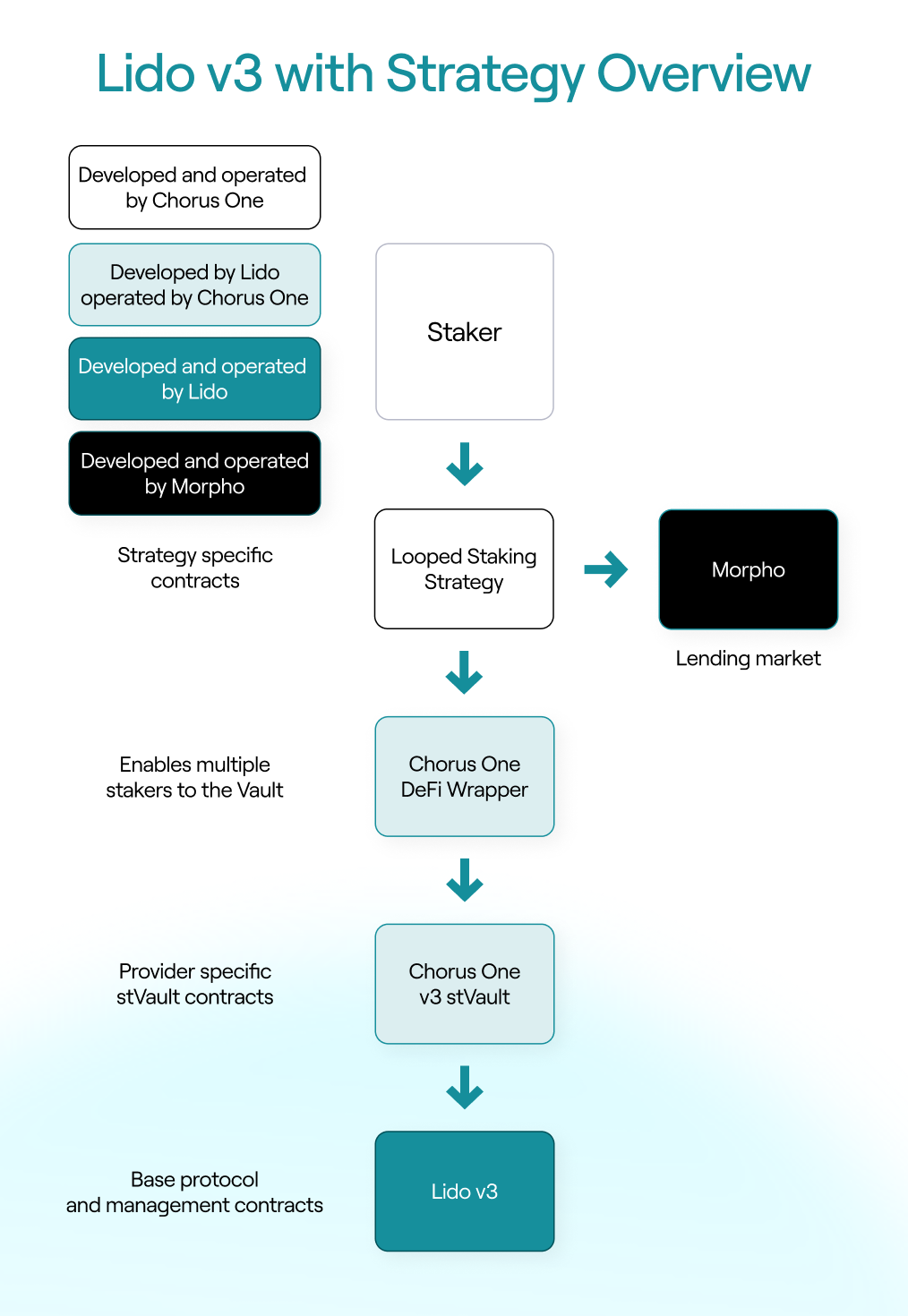

Lido Vault Strategies are optional smart contract layers placed in front of the DeFi Wrapper. Instead of interacting with the wrapper directly, users interact with the strategy contract, which orchestrates staking, minting, borrowing, and rebalancing logic on their behalf.

This design allows users to access additional on-chain opportunities, such as lending, liquidity provision, or structured yield strategies, without managing multiple contracts or transactions themselves. Importantly, strategies are not required: a vault can operate purely as a staking vehicle. When added, however, strategies become the mechanism that enables more advanced capital usage while preserving Lido’s core security guarantees.

One of the most important implications of Lido v3 is how it simplifies connecting staking to existing DeFi venues. Protocols like Morpho have become key on-chain destinations for capital, including for crypto-native funds and increasingly for TradFi-linked participants seeking lending and borrowing exposure using assets like USDC.

What these venues generally lack is direct access to staking. Large financial institutions are comfortable with lending and collateralized borrowing, but staking remains unfamiliar territory.

Lido Vaults change this dynamic. By starting upstream with staking and flowing downstream into DeFi, vault strategies provide a natural bridge: participants already active in lending markets can begin to understand staking not as a separate activity, but as an integrated source of yield and collateral. Over time, this structure lowers the conceptual barrier to staking for institutions that otherwise would not engage with it directly.

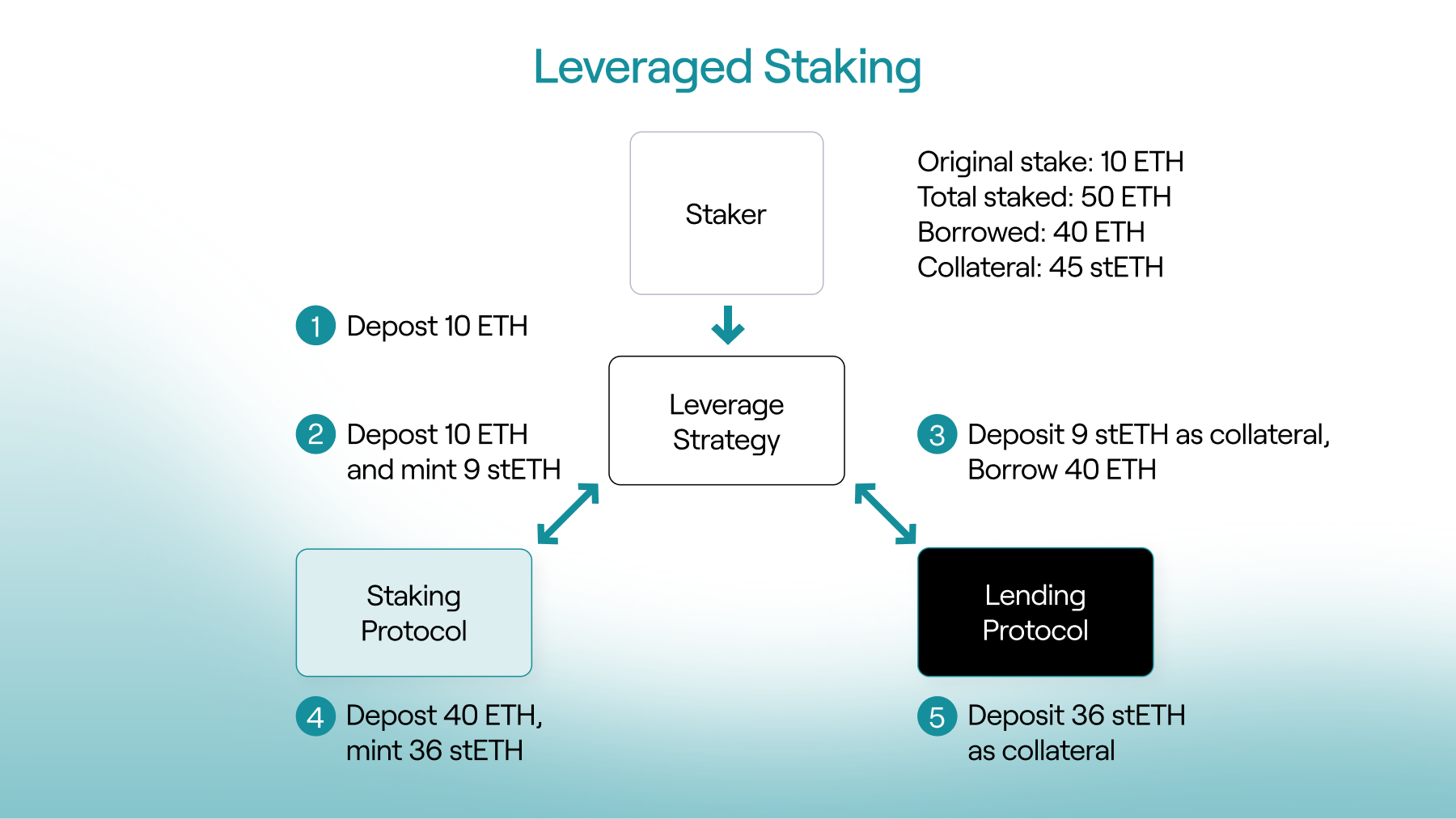

To understand the practical implications of Vault Strategies, Chorus One built an early-stage proof-of-concept leveraged staking strategy using Lido v3 and Morpho.

What is leveraged staking? Leveraged staking involves borrowing ETH against staked collateral (wstETH) and staking the borrowed ETH again to amplify staking rewards. Traditionally, this requires looping: stake, mint, borrow, stake again, and repeat until the target leverage is reached. Lido v3, however, enables a cleaner, less complex approach.
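The cost of looping can be quantified with a short Python sketch. If each round borrows a fraction `ltv` of the collateral just posted and stakes it again, total exposure after n rounds is the geometric series E(1 + ltv + ... + ltv^n), which approaches E / (1 - ltv) but never reaches it. The function names and the 1 wstETH = 1 ETH simplification are assumptions for illustration; real positions must also account for the wstETH exchange rate, borrow costs, and liquidation buffers.

```python
def loop_exposure(equity: float, ltv: float, loops: int) -> float:
    """Total staked exposure after `loops` rounds of borrow -> stake again.

    Assumes 1 wstETH == 1 ETH and that every round borrows exactly `ltv`
    of the tranche just staked. Debt equals exposure minus equity.
    """
    exposure = 0.0
    tranche = equity
    for _ in range(loops + 1):
        exposure += tranche  # stake this tranche
        tranche *= ltv       # size of the borrow that funds the next round
    return exposure


def loops_needed(target_leverage: float, ltv: float, tol: float = 1e-9) -> int:
    """Borrow-and-stake rounds needed to reach `target_leverage` x equity."""
    max_leverage = 1.0 / (1.0 - ltv)
    if target_leverage >= max_leverage:
        raise ValueError(f"target exceeds the looping limit of {max_leverage:.2f}x")
    loops = 0
    while loop_exposure(1.0, ltv, loops) < target_leverage - tol:
        loops += 1
    return loops
```

For example, `loops_needed(5.0, 0.9)` returns 6: six borrow-and-stake rounds on top of the initial stake, each one a separate set of contract calls. A single-shot design instead borrows (5 - 1) x equity directly.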

Using a strategy contract and Morpho’s callback architecture, we were able to design a flow where users can enter a leveraged position in a single transaction, at any target leverage level, without looping. Gas costs remain constant regardless of leverage, and contract complexity is reduced significantly.
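The one-transaction entry can be illustrated with a toy Python model. It assumes a lending market that credits collateral first, calls back into the caller, and only then checks that the collateral was delivered; the article attributes this callback pattern to Morpho, but `ToyLendingMarket`, `supply_collateral`, and `enter_leveraged` are hypothetical names, not Morpho's or Lido's actual API. Because the borrow size (L - 1) x equity is computed directly, the number of steps is the same whether the target is 2x or 5x, which is the intuition behind constant gas costs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyLendingMarket:
    """Hypothetical lending market with a collateral-supply callback.

    Collateral is credited first, the caller's callback runs (and may borrow),
    and only then is delivery of the promised collateral checked.
    Unlike a real transaction, this toy does not roll back state on failure.
    """

    max_ltv: float
    collateral: float = 0.0
    debt: float = 0.0

    def borrow(self, amount: float) -> float:
        if self.debt + amount > self.max_ltv * self.collateral:
            raise ValueError("borrow would exceed max LTV")
        self.debt += amount
        return amount

    def supply_collateral(self, amount: float, callback: Callable[[float], float]) -> None:
        self.collateral += amount     # credit collateral up front
        delivered = callback(amount)  # caller may borrow inside the callback
        if delivered < amount:        # then verify the promised collateral arrived
            raise ValueError("callback did not deliver the promised collateral")


def enter_leveraged(equity_eth: float, target_leverage: float, market: ToyLendingMarket) -> None:
    """One-shot leveraged entry: no loop, fixed steps for any leverage.

    Assumes staking and minting convert 1 ETH into 1 wstETH.
    """
    total_collateral = equity_eth * target_leverage
    borrow_amount = total_collateral - equity_eth

    def on_supply_collateral(promised: float) -> float:
        borrowed = market.borrow(borrow_amount)
        # Hypothetical stake + mint step: user ETH plus borrowed ETH -> wstETH.
        return equity_eth + borrowed

    market.supply_collateral(total_collateral, on_supply_collateral)
```

With `max_ltv=0.9`, entering at 5x with 10 ETH posts 50 wstETH of collateral against 40 ETH of debt in one call. The ceiling is still 1 / (1 - ltv), so an 11x target fails the borrow check; the gain over looping is in steps, not in maximum leverage.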

Equally important is the exit path. One of the hardest problems in leveraged staking is unwinding positions during withdrawals. Lido v3 introduces a withdrawal-with-rebalancing feature that allows the loan to be repaid and the withdrawal to be requested in the same transaction. This means positions can be unwound immediately, without waiting days in the withdrawal queue while a loan remains outstanding.
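The accounting of a one-step unwind can be sketched in Python. This models only the outcome the article describes (the loan is settled and a withdrawal is requested for the remaining equity at the same time), not how Lido's rebalancing works internally; `Position`, `unwind`, and the `steth_per_wsteth` rate input are hypothetical, and fees are ignored.

```python
from dataclasses import dataclass


@dataclass
class Position:
    collateral_wsteth: float  # wstETH posted in the lending market
    debt_eth: float           # ETH owed to the lending market


def unwind(position: Position, steth_per_wsteth: float) -> dict[str, float]:
    """Settle the debt and size the withdrawal request in one step (toy model).

    Assumes 1 stETH == 1 ETH and ignores fees and slippage. The loan is never
    left outstanding while the withdrawal request waits in the queue.
    """
    collateral_eth = position.collateral_wsteth * steth_per_wsteth
    if collateral_eth < position.debt_eth:
        raise ValueError("position is underwater; collateral cannot cover the debt")
    repaid = position.debt_eth
    remaining_equity = collateral_eth - repaid
    return {"repaid_eth": repaid, "withdrawal_requested_eth": remaining_equity}
```

For instance, 50 wstETH at a rate of 1.2 stETH per wstETH against 40 ETH of debt would repay 40 ETH and request a withdrawal of 20 ETH.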

From a technical perspective, this combination of strategy contracts, Morpho callbacks, and Lido’s rebalancing withdrawals makes leveraged staking both more capital-efficient and more operationally efficient than previous designs.

Building the strategy contract itself was feasible using largely off-the-shelf components. Lido v3 is highly composable by design, and together its rebalancing feature and Morpho’s callback architecture significantly simplify both entry and exit flows.

However, the most important lesson was that contract development is only a fraction of the work required to build an institutional-grade vault strategy.

Robust products require published risk analysis covering every protocol and asset involved, risk controls embedded directly into contracts and operational workflows, thoughtful financial structuring for downside protection, and real-time monitoring with alerting and emergency procedures. They also require active financial operations, including fund rebalancing and emergency unwinds when market conditions demand it.

Recent DeFi vault failures have shown that technical sophistication alone is insufficient. Many curators can build contracts that work when conditions are favorable, but lack the risk discipline needed to operate through stress events. This is where infrastructure providers must differentiate themselves.

Lido v3 stVaults are not just a new staking interface; they are an invitation for operators to take on an active role with more visibility and responsibility. Operators are no longer anonymous infrastructure providers; they become integral participants in how staking and DeFi strategies are designed, operated, and risk-managed.

For Chorus One, this aligns closely with how we already operate across other ecosystems. As a SOC 2-compliant, ISO 27001-certified provider based in Switzerland, we see Lido v3 as a natural evolution of institutional staking rather than a departure from it.

Lido v3 stVaults are expected to launch in 2026. When they do, staking will no longer be a terminal activity: it will be the first step into programmable, risk-managed DeFi strategies that can be accessed in a single transaction.

DeFi is going institutional, and that transition starts with staking. With Lido v3, users no longer need to choose between staking yield and DeFi participation. They can have both – cleanly, transparently, and with operator accountability.

At Chorus One, we are excited to bring these strategies to market, beginning with public vaults and expanding to private, customized deployments. If you are interested in exploring Lido Vault Strategies or learning how they can be tailored to your needs, we would be glad to continue the conversation.

Chorus One, one of the world’s leading institutional staking providers, today announced a new collaboration with Ledger, the world leader in digital asset security for consumers and enterprises, through its Ledger Enterprise platform. Through this collaboration, institutions can participate in Proof-of-Stake networks without transferring custody of their digital assets, while benefiting from Chorus One’s secure, research-backed staking operations.

“Institutions need staking solutions that match their security, compliance, and operational requirements. Integrating with Ledger Enterprise allows us to deliver a streamlined staking experience that keeps governance firmly in the hands of the client while providing the performance and reliability Chorus One is known for.” - Damien Scanlon, Chief Product Officer at Chorus One.

“Companies are adopting digital assets at a rapid pace worldwide, but uncompromising security and governance remain fundamental prerequisites. By integrating Chorus One’s staking infrastructure into the Ledger Enterprise platform, we make it simpler for institutions to earn staking yields with security and governance. This partnership delivers the best of both worlds: high-performance staking combined with robust self-custody.” - Sébastien Badault, Executive Vice President, Ledger Enterprise

The Ledger Enterprise platform provides hardware-secured private key protection, policy-based governance, multi-authorization workflows, and comprehensive auditability. This integration allows staking operations, including delegation and reward management, to occur entirely within the institution’s existing governance framework.

This connection expands Chorus One’s global footprint across institutional staking and strengthens Ledger Enterprise’s offering as a governance-focused, end-to-end digital asset management platform.

About Chorus One

Chorus One is one of the largest institutional staking providers globally, operating infrastructure for over 40 Proof-of-Stake (PoS) networks, including Cosmos, Solana, Avalanche, and Near. Since 2018, the company has been at the forefront of the PoS industry, offering easy-to-use, enterprise-grade staking solutions, conducting industry-leading research, and investing in innovative protocols through Chorus One Ventures. Chorus One is an ISO 27001-certified provider for institutional digital asset staking.

About Ledger

Celebrating its 10 year anniversary in 2024, Ledger is the world leader in Digital Asset security for consumers and enterprises. Ledger offers connected devices and platforms, with more than 8M devices sold to consumers in 165+ countries and 10+ languages, 100+ financial institutions and commercial brands. Over 20% of the world’s crypto assets are secured by Ledger.

Ledger is the digital asset solution secure by design. The world’s most internationally respected offensive security team, Ledger Donjon, is relied upon as a crucial resource for securing the world of Digital Assets. With over 14 billion dollars hacked, scammed or mismanaged in 2023 alone, Ledger’s security brings peace of mind and uncompromising self-custody to its community.

Don’t buy “a hardware wallet.” Buy a LEDGER™ signer.

LEDGER™, LEDGER WALLET™, LEDGER RECOVER™, LEDGER STAX™, LEDGER FLEX™ and LEDGER NANO™ are trademarks owned by Ledger SAS

Bluetooth® word mark and logos are registered trademarks owned by Bluetooth SIG, Inc. and any use of such marks by Ledger is under license.

E Ink® is a registered trademark of E Ink Corporation.

The Stacks ecosystem continues to evolve rapidly as it builds a powerful Bitcoin-aligned smart contract layer. With the launch of Dual Stacking, Stacks introduces one of its most notable protocol improvements to date: a mechanism designed to boost decentralization, improve capital efficiency, and strengthen user participation in securing the network.

At Chorus One, we are pleased to support this upgrade and help institutions and delegators tap into Stacks’ growing ecosystem with the same reliability and operational excellence we bring to all Proof-of-Stake networks.

Dual Stacking is a new mechanism that allows the Stacks network to operate with two simultaneous consensus roles.

In other words, instead of relying solely on STX to secure the network, Stacks introduces a dual-asset participation model where STX and sBTC both play distinct but complementary roles.

This ensures that economic security grows alongside real Bitcoin liquidity entering the ecosystem, aligning incentives more closely with the long-term vision of Bitcoin-anchored smart contracts.

Dual Stacking strengthens the Stacks network by distributing security across two asset types. Instead of relying solely on STX participation, the blockchain now benefits from users who contribute sBTC as well. This approach ties the network’s growth directly to the presence of real Bitcoin liquidity, making the underlying security more resilient and more aligned with Stacks’ long-term vision.

This upgrade offers a more flexible pathway for participation. STX holders can continue stacking as before, locking their tokens to earn BTC rewards contributed by miners. Meanwhile, sBTC holders, who may prefer exposure to Bitcoin rather than STX, gain an entirely new way to contribute to the network’s economic security. This flexibility broadens the potential user base and makes room for different treasury strategies, especially for institutions managing diversified portfolios.

One of the most compelling aspects of Dual Stacking is that it allows participants to keep their assets productive without forcing them into a single asset class. STX holders can continue earning BTC, while sBTC holders can contribute security using a Bitcoin-pegged asset that they may already hold for other purposes. This dual-path approach improves capital efficiency without compromising decentralization or the economic guarantees of the network.

With sBTC becoming a core component of network security, Stacks deepens its commitment to being a true Bitcoin Layer. By allowing a BTC-pegged asset to participate directly in consensus economics, the network becomes even more intertwined with the Bitcoin ecosystem, both technologically and financially.

In practice, Dual Stacking operates through two parallel participation tracks that both contribute to the same consensus process. STX holders lock their tokens in a familiar stacking cycle and earn Bitcoin rewards, just as they do today. Meanwhile, sBTC holders lock their sBTC for a defined period, contributing to network security through a separate mechanism designed specifically for Bitcoin-pegged collateral.

Although the two tracks function differently, they both reinforce the same underlying network guarantees. They also expand the validator and operator environment, creating new responsibilities and opportunities for infrastructure providers like Chorus One.

Chorus One is proud to support Stacks through this transition and help institutions and token holders navigate the opportunities it creates. Our infrastructure is built to meet the demands of high-performance, high-availability networks, and our experience across more than 40 Proof-of-Stake ecosystems positions us to deliver reliable, secure operations for STX stacking and for future Dual Stacking pathways as they become available.

We bring ISO 27001-certified and SOC 2-compliant security practices, research expertise, and dedicated institutional support to ensure that participants can engage in Stacks with confidence and clarity.

Dual Stacking marks a milestone for the Stacks ecosystem, introducing a dual-asset model that enhances security, increases flexibility, and directly aligns the network with Bitcoin’s economic gravity. The update creates new opportunities for participants, improves capital efficiency, and positions Stacks for the next phase of growth as a Bitcoin-native smart contract layer.

Chorus One looks forward to supporting this new era and helping institutions and delegators make the most of Stacks’ innovative approach to network participation.

We would like to congratulate Monad on its successful progress toward mainnet launch, a milestone that marks the beginning of a new era for high-performance, Ethereum-compatible infrastructure. As the ecosystem prepares for this next chapter, we are excited to announce a new integration with Bitget, one of the world’s leading universal exchanges (UEX), to make Monad (MON) staking accessible to users across global markets.

This collaboration brings together Monad’s breakthrough execution architecture, Bitget’s global reach of 120M users, and Chorus One’s institutional-grade staking expertise, creating a seamless, secure, and highly scalable gateway for early participation in the Monad network.

Monad’s design introduces a leap in execution performance, pairing parallelized transaction processing with MonadBFT consensus to deliver low-latency, high-throughput computation while maintaining full EVM compatibility. As anticipation builds for mainnet, demand for safe, transparent, and reliable staking pathways continues to grow.

However, during Monad’s initial phase, direct staking will be limited, and access will occur primarily through trusted partners. This is where the Chorus One and Bitget integration plays a critical role: it ensures that users can enter the ecosystem through an interface they already trust, backed by infrastructure built for institutional reliability.

Through this integration, Bitget users will gain access to MON staking via Chorus One’s validator infrastructure, benefitting from:

Bitget will route staking operations to Chorus One’s infrastructure, ensuring that delegators participate through a highly resilient validator with a multi-year track record of zero slashing events across 40+ networks.

Staking rewards will be displayed directly within Bitget’s interface, offering a streamlined experience for users who prefer not to manage on-chain delegation flows themselves.

As Monad does not auto-compound staking rewards, users will have the option to manually compound earnings through Bitget’s interface or other integrated tools. This gives full control over reward management while preserving opportunities for optimized yield strategies.
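Why manual compounding matters can be shown with a little arithmetic. The Python sketch below compares leaving rewards unstaked (simple accrual) with restaking them a fixed number of times per year; `staked_balance` is a hypothetical helper, the rate is an illustrative input rather than a Monad figure, and any fees or gas for each restake are ignored.

```python
def staked_balance(principal: float, annual_rate: float, years: float,
                   compounds_per_year: int) -> float:
    """Balance after restaking rewards `compounds_per_year` times a year.

    compounds_per_year=0 means rewards are never restaked (simple accrual).
    Ignores fees, gas, and changes in the reward rate over time.
    """
    if compounds_per_year == 0:
        return principal * (1 + annual_rate * years)
    periods = compounds_per_year * years
    return principal * (1 + annual_rate / compounds_per_year) ** periods
```

At a hypothetical 10% annual rate, 1,000 MON held for a year grows to 1,100 MON without compounding and to slightly more than that with monthly restaking; the gap widens with higher rates and longer horizons, which is what the manual compounding option lets users capture.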

With Bitget’s strong presence in APAC, Europe, and emerging markets, the partnership ensures broad accessibility to MON staking from day one, especially for users who may not otherwise have access to early delegation options.

Complementing the staking integration, Bitget has also launched the MON On-Chain Earn product on November 30. This offering provides users with an accessible way to participate in Monad’s on-chain economy. Users can easily join through Bitget’s dedicated On-Chain Earn interface and start earning on their MON holdings.

At Chorus One, our mission is to empower participation in next-generation decentralized protocols.

For the Bitget integration, we provide infrastructure with continued monitoring, optimization, and reporting to ensure a smooth delegation experience as the ecosystem matures.

Bitget’s influence as a global universal exchange, combined with Chorus One's proven validator track record, helps strengthen the foundation of the Monad network even before its full launch. By lowering the barrier to entry and offering a compliant, scalable on-ramp, this integration helps ensure the validator set grows in decentralization, stability, and geographic diversity.

And as Monad prepares to introduce its high-performance EVM to the world, expanding staking accessibility is a crucial part of building a resilient and secure network from day one.

Monad’s arrival represents a transformative moment for the EVM ecosystem, delivering performance gains without sacrificing compatibility or developer experience. The integration between Chorus One and Bitget is only the first step in a long-term commitment to supporting the network with high-quality staking infrastructure, research insights, and ecosystem collaboration.

We’re proud to contribute to Monad’s journey, and equally proud to help users around the world participate safely and effectively in its early stages.

If you’re a platform, wallet, or institution looking to enable MON staking, our team is ready to support your integration.

Contact us to learn more about partnering with Chorus One for Monad staking.