At Chorus One, we’ve been long-time supporters of the Cosmos ecosystem: we were among the genesis validators for the Cosmos Hub and multiple other flagship appchains, contributed cutting-edge research on topics such as MEV, actively participate in governance, and, through our venture arm, invested in over 20 Cosmos ecosystem projects such as Celestia, Osmosis, Anoma, Stride, and Neutron.

The Cosmos vision resonated with many for different reasons. It offered a technology stack that empowered user and developer sovereignty, enabled a multichain yet interoperable ecosystem, and prioritized decentralization without compromising on security. Since its inception, each component of the Cosmos stack on a standalone basis could command multi-billion-dollar outcomes if properly commercialized:

Additionally, many ideas that are now widely recognized were first pioneered by the Cosmos ecosystem before gaining mainstream adoption.

However, the Cosmos Hub has been plagued by divided strategic direction, governance drama, little focus on driving value accrual and commercialisation, and slow iteration. As a result, it has been outcompeted by more agile, product-focused peers that now far exceed the Hub in performance, developer ecosystem, applications, and, ultimately, economic value creation.

Some initiatives did not deliver the expected results for the Hub; Interchain Security (ICS) is one example. Early on, we raised concerns that this model could lead to more centralization, and later explained the economic issues with Replicated Security. Alternatives like Mesh Security, Babylon, and EigenLayer have since entered the space, offering teams a wider range of shared security models to choose from. As a result, only two chains adopted ICS: Neutron and Stride. Neutron recently decided to leave ICS, so Stride will be the only remaining chain using Replicated Security going forward.

A major change in Cosmos was long overdue, and we believe it began with the acquisition of Skip Inc. The team rebranded as Interchain Labs, a subsidiary of the Interchain Foundation (ICF), now leading Cosmos’ product strategy, vision, and go-to-market efforts.

In this piece, we present the bull case for what’s ahead for Cosmos, driven by a renewed focus on the Cosmos Hub and ATOM as the center of the ecosystem, internalized development across the Interchain Stack, and a north star centered around users and liquidity across Cosmos.

Between 2022 and 2024, the Cosmos Hub and the Cosmos ecosystem as a whole needed a breath of fresh air and a new team with both the execution skills and vision to revive the ecosystem and make it exciting again. More importantly, a team that was completely independent from the conflicts and divisions that were created within the ecosystem. It was time to leave politics behind and focus on uniting the community under one clear vision.

The team that brought this fresh vision is Skip. Co-founded by Maghnus Mareneck and Barry Plunkett in 2022, Skip became a key contributor to the Cosmos ecosystem by working on areas such as MEV and cross-chain interoperability. In just two years, the Skip team made meaningful contributions to the ecosystem by building tools and software such as:

In December 2024, Skip was acquired by the Interchain Foundation, marking a historic moment for both Skip and the Cosmos ecosystem. Rebranded as Interchain Labs, Skip became a subsidiary responsible for driving product development, executing strategy, and accelerating ecosystem growth across Cosmos.

We see this as a bullish turning point in Cosmos for three reasons:

Skip’s new mandate is to position the Cosmos Hub as the primary growth engine and liquidity center of the ecosystem. This represents a significant shift in strategy, with ATOM evolving from a governance-focused token to the core asset underpinning value capture across the entire stack. At Chorus One, we’ve worked with Barry and Maghnus since the early Skip days as an investor and operator and saw Skip become a dominant player for MEV within Cosmos and a market leader in IBC transactions via Skip.go. We couldn’t think of a better team to drive the Cosmos Hub.

The Cosmos ecosystem relies heavily on IBC, its native protocol for blockchain interoperability. However, IBC has mainly been adopted by relatively homogeneous blockchains, most of which use CometBFT and the Cosmos SDK. Implementing IBC is complex, as it requires a light client, a connection handshake, and a channel handshake. This makes it especially difficult to integrate with environments like Ethereum, where strict gas metering imposes further constraints.

In March 2025, IBC v2 was announced. It simplifies cross-chain communication, reduces implementation complexity, and extends compatibility between chains. The main features we’re excited about with the integration of IBC v2 and the broader vision for the Cosmos Hub are:

With IBC v2, we believe the Cosmos Hub can become the main router for the entire Interchain. IBC Eureka is the upgrade that brings IBC v2 to the Cosmos Hub. It simplifies cross-chain connections by routing transactions between Cosmos and non-Cosmos chains through the Cosmos Hub.

IBC Eureka aims to increase demand for ATOM as it encourages routing via the Hub. In the future, one possible mechanism under discussion is using fees collected on these transactions to buy and burn ATOM, a model that could both reduce supply and create sustained demand. Although fees haven’t been decided yet, the framework establishes a foundation for potential value accrual to ATOM through real usage.

IBC is already a top-5 bridging solution by volume and has the most connected chains (119), even before establishing connections with major ecosystems such as Ethereum and Solana. With this vision, the Cosmos Hub is becoming the central hub for interchain economic activity, serving as the main hub for bridging and competing directly with solutions like Wormhole and LayerZero.

Historically, the Cosmos Hub was plagued by user experience problems. Its initial vision was to be a lightweight demonstration of the Cosmos stack, and it lacked features that competing alt-L1s have become known for, such as smart contract programmability, interoperability with external networks, high throughput, and fast block times.

IBC v2 aims to address interoperability between Cosmos and exogenous chains. However, with the increased importance of the Cosmos Hub as a router for IBC, the Hub itself also needs a native ecosystem to flourish and differentiate itself from its peers. The ICF recently funded the open-sourcing of evmOS, an Ethereum-compatible Layer 1 solution that allows developers to build EVM-powered blockchains using the Cosmos SDK. The library will be renamed Cosmos EVM and maintained by Interchain Labs as the primary EVM-compatibility solution of the Interchain Stack. With this move, the Cosmos Hub now has a viable path to becoming EVM-compatible and building a surrounding ecosystem, enabling and improving the IBC experience.

Lastly, the updated product direction will enable a focused approach on performance, catering to newly onboarded liquidity and users. The Cosmos Hub's average block time is currently ~5.5 seconds, and because interchain transactions span multiple chains and require a minimum of 2 block confirmations, the average IBC transaction ends up taking >20 seconds. That is a suboptimal user experience when the objective is a chain-abstracted experience across any Cosmos chain.

Optimizations to CometBFT could reduce this to around 1 second or even less. With a 1-second block time, cross-chain transactions can reach finality within 2 seconds, massively improving the interchain experience. We believe this is the path the Cosmos Hub will take, with a focus on performance in the near future.
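The arithmetic behind these figures can be sketched as a lower bound. This is a simplified model of our own, not something specified by CometBFT or IBC: it counts only the confirmation wait (block time multiplied by confirmations) and ignores relayer latency and the destination chain's blocks, which is why the ~5.5-second case gives a floor of 11 seconds rather than the observed >20 seconds.

```python
def min_ibc_latency_seconds(block_time_s: float, confirmations: int = 2) -> float:
    """Lower bound on end-to-end IBC latency, in seconds.

    Simplifying assumption: latency is only `confirmations` blocks of waiting.
    Relayer polling, proof queries and the destination chain's own block time
    are ignored, so real transfers take longer than this.
    """
    if block_time_s <= 0 or confirmations < 1:
        raise ValueError("block time must be positive and confirmations >= 1")
    return block_time_s * confirmations


for block_time in (5.5, 1.0):
    print(f"{block_time}s blocks -> at least {min_ibc_latency_seconds(block_time):.1f}s")
# 5.5s blocks -> at least 11.0s
# 1.0s blocks -> at least 2.0s
```

Under this model the floor scales linearly with block time, so cutting block time to 1 second brings it down to the ~2 seconds cited above.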

As the Cosmos Hub advances toward becoming a service-oriented, multichain platform, a major priority is addressing the persistent challenge of liquidity fragmentation. While IBC enables seamless interoperability across chains, the Cosmos Hub still lacks a native trading layer that can handle efficient multichain liquidity routing.

Stride, a protocol recognised for its early success in liquid staking, has recently announced an expansion of its mission to address this critical infrastructure gap. With support from the Interchain Foundation, Stride is building a new DEX optimized for IBC Eureka, the native routing system of the Cosmos Hub. This new product will serve as the foundation for onboarding, bridging, and seamless asset movement across Cosmos and external ecosystems like Ethereum, Solana, and others.

Stride is purpose-built for multichain trading. It enables atomic swaps across networks without the need to bridge assets or switch wallets. The protocol supports all tokens, chains, and wallets integrated with IBC Eureka, and offers features like dynamic fees, permissionless hooks, and deep routing. Stride will also offer vertically integrated liquid staking, built-in vaults, and liquidity pools designed for both user flexibility and capital efficiency. The team already has 8 figures in liquidity commitments.

With this partnership, Stride is optimizing for the Cosmos:Go router, reinforcing its role as the Hub’s native liquidity router. In return, Stride has committed to sharing 20% of its protocol revenue with ATOM holders and building fully on the Cosmos Hub, while remaining independent and continuing to operate its existing products. This makes Stride both an economic and technical pillar in the Hub’s long-term roadmap, positioned to become a core DeFi player for Cosmos.

From a market perspective, the opportunity is large. Stride’s liquid staking product already serves over 136,000 users and secures significant value across multiple chains. By integrating liquid staking with its new DEX, and enabling features such as cross-selling, rehypothecation, and collateralized DeFi, Stride is expanding its total addressable market. Stride isn’t just launching a new product. It’s building key infrastructure that helps Cosmos Hub become the economic center of the multichain world.

The market has consistently shown that general-purpose Layer 1 blockchains are the primary drivers of value creation. Networks like Ethereum, Solana, Binance Chain, Sui, and, more recently, Hyperliquid have demonstrated that ecosystems with composability, programmability, and strong liquidity attract developers, users, and value accrual.

In Cosmos, a clear shift is emerging. The recent decision by Stride to deploy directly on the Cosmos Hub signals a new direction: some appchains are starting to rethink the need for sovereign infrastructure. Instead, they are exploring the benefits of building directly on the Hub’s upcoming permissionless VM layer, gaining access to shared liquidity, execution environments, and ATOM alignment without the overhead of maintaining an independent chain.

A recent example of this trend is Stargaze, a well-known NFT appchain. Discussions are underway to migrate Stargaze to the Cosmos Hub and convert STARS into ATOM. Such a move would align Stargaze more closely with the Cosmos Hub’s economic and governance layers, while bringing a Tier-1 Cosmos application to the Cosmos Hub. This direction, while ultimately subject to decentralized governance, reflects a growing recognition that Cosmos Hub-native applications could benefit from tighter integration and shared growth.

This marks a turning point for Cosmos. For years, teams were encouraged to launch sovereign chains. But in a competitive Layer 1 environment, fragmentation has become a liability. By consolidating applications on a unified execution layer, Cosmos Hub has the opportunity to increase activity on its L1, strengthen ATOM’s value capture, and present a more cohesive ecosystem to the broader crypto market.

With a permissionless VM, application migration, and deeper alignment through ATOM, the Cosmos Hub is becoming a competitive base layer capable of hosting top-tier applications and standing alongside the most successful general-purpose blockchains in the space.

Like many others, we were first drawn to Cosmos for its egalitarian vision: a multichain, interoperable future that prioritizes user and developer sovereignty, permissionlessness, and trust minimization. Like any emerging technology, it faced setbacks and challenges over the years. But behind the politics and drama was a tech stack that stayed true to the core values of decentralization. We couldn’t be more excited to support Barry, Maghnus, and the Interchain Labs team, along with teams like Stride, as Cosmos embarks on its next chapter, led by none other than the first stewards of its technology: Cosmos Hub and ATOM.

Earn more by staking with Chorus One — trusted by institutions since 2018 for secure, research-backed PoS rewards.


Cosmos has been a pioneer in envisioning a multichain world where different blockchains can interact and communicate with each other. This vision was built around infrastructure such as the Cosmos SDK, a framework for building custom blockchains, and the IBC protocol, which facilitates interoperability between chains. This innovation laid the foundation for the Appchain thesis, where applications operate on their own blockchains, allowing for greater flexibility, sovereignty, and value capture. This initial Cosmos vision also inspired teams like Optimism, which applied similar ideas to create an ecosystem of Ethereum Layer 2s based on the OP stack.

Initia is an evolution of both the Cosmos and Ethereum/Optimism visions, introducing a new perspective on the Appchain and Multichain world, and presenting an Appchain 2.0 vision. Initia aims to offer applications all the benefits of a Layer 1 blockchain, such as flexibility, sovereignty and value capture, while overcoming challenges like technical complexities and poor user experience. Initia achieves this by providing a unified, intuitive, and interconnected platform of rollups, which will be explored further in this article.

As mentioned, Initia’s value proposition is to simplify and enhance the Multichain experience by creating a unified, interconnected ecosystem of rollups. This approach allows for seamless interaction across the various Initia Rollups (also called Interwoven Rollups, or just Rollups) and provides the benefits of Layer 1 blockchains without their associated drawbacks. Let’s take a closer look at the Initia architecture.

The Initia Layer 1 is the core layer of the Initia ecosystem. It is based on the Cosmos SDK and uses CometBFT for consensus. Initia uses Move for smart contracts, and its implementation of the Move Virtual Machine introduces a key innovation: tight integration with the Cosmos SDK. This integration allows developers to call Cosmos SDK functionality, such as sending IBC transfers or performing interchain account actions, directly from Move smart contracts.

IBC is the standard messaging protocol across the Cosmos ecosystem. By embedding it into the Move environment, Initia removes the need to create new Cosmos modules for these capabilities. Instead, some of this logic can now be handled within Move, allowing for more flexible and modular development.

At the Layer 1 level, Initia also includes the LayerZero module, which plays a critical role in enabling interoperability. This module acts as an endpoint, allowing communication with any other chain that supports LayerZero.

Initia Layer 1 has a system to protect assets and validate operations across its Rollups. Here’s how it works:

Initia introduces two key modules that work closely together: the DEX module and the Enshrined Liquidity module. The DEX module is a built-in decentralized exchange that allows users to trade using balancer-style pools and stableswap pools. By embedding this functionality directly into the chain, Initia provides native infrastructure for liquidity and trading without relying on external smart contracts.

Complementing this is the Enshrined Liquidity system. In Initia’s design, the native token, INIT, serves as both the governance asset and the staking token, and stakers earn rewards generated through inflation. This inflationary payout represents the network’s security budget.

The challenge with this model is that a significant percentage of the token supply is locked into staking solely to secure the network, often without contributing to liquidity or broader ecosystem utility. Initia addresses this inefficiency by connecting staking and liquidity through the Enshrined Liquidity system, creating a more productive and aligned use of token capital.

With Enshrined Liquidity, users can stake whitelisted LP tokens from Initia's built-in DEX module directly with validators. These LP tokens represent trading pairs such as INIT/USDC or INIT/ETH. Any trading pair on the DEX where INIT makes up more than 50% of the weight can be approved for Enshrined Liquidity via governance, and each pair can be assigned a specific reward weight.

For example, if the INIT/USDC pair is allocated 60% of the staking rewards and the INIT/ETH pair receives 40%, these LP tokens become the main way rewards are distributed. This system turns Initia’s Layer 1 into a deep liquidity hub that supports the entire ecosystem, including all Rollups built on top of it.
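The weight precondition for whitelisting can be expressed as a simple check. This is a hypothetical sketch: `Pool` and `meets_init_weight_rule` are illustrative names, not Initia's DEX module API, and we read "more than 50%" strictly, so a 50:50 pool does not qualify (switch `>` to `>=` if the actual rule is "at least 50%"). Passing the check only makes a pair eligible; governance still decides whether to whitelist it.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """A weighted DEX pool, e.g. an 80:20 Balancer-style INIT/USDC pool."""
    name: str
    weights: dict[str, float]  # token symbol -> pool weight


def meets_init_weight_rule(pool: Pool, base: str = "INIT") -> bool:
    """True if `base` makes up strictly more than 50% of the pool's weight."""
    total = sum(pool.weights.values())
    if total <= 0:
        return False
    return pool.weights.get(base, 0.0) / total > 0.5


candidates = [
    Pool("INIT/USDC 80:20", {"INIT": 80, "USDC": 20}),
    Pool("INIT/ETH 50:50", {"INIT": 50, "ETH": 50}),
    Pool("ETH/USDC 50:50", {"ETH": 50, "USDC": 50}),
]
for pool in candidates:
    print(pool.name, "eligible" if meets_init_weight_rule(pool) else "not eligible")
```

Because the rule is about pool weight rather than the pair itself, the same INIT/ETH pair could qualify as an INIT-heavy weighted pool while failing as a 50:50 pool under this reading.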

Interwoven Rollups are application-specific rollups built on top of Initia Layer 1. They are connected to the L1 through the OPinit Bridge, which enables seamless communication between the two layers via two Cosmos SDK modules: the OPhost Module, integrated into Initia L1, and the OPchild Module, embedded in each rollup. The OPhost Module manages rollup state, including sequencer information, and is responsible for creating, updating, and finalizing bridges and L2 output proposals. On the rollup side, the OPchild Module handles operator management, executes messages from Initia L1, and processes oracle price updates. Coordination between the two modules is handled by the OPinit Bot Executor, which ensures efficient and secure cross-layer operations. Unlike traditional Rollups, Interwoven Rollups can operate with anywhere from 1 to 100 sequencers. The Initia team recommends 1 to 5 for best performance, with the system capable of supporting up to 10,000 transactions per second with low latency.

Each rollup on Initia uses the Cosmos SDK for its application layer, but modules like staking and governance are usually excluded to streamline development. Governance is instead handled through smart contracts, removing the need for a native token. Each rollup supports fraud proofs: if a sequencer acts maliciously, validators can use Celestia data to confirm the issue and fork the chain if necessary.

Each rollup on Initia can choose its own VM: MoveVM, WasmVM, or EVM. This gives developers the flexibility to choose the best stack for their use case. The LayerZero module is implemented as a Cosmos SDK module rather than a Move smart contract, because relying solely on Move would limit compatibility. By embedding LayerZero messaging at the Cosmos SDK level, Initia ensures that all Rollups, regardless of their VM, support messaging from the moment they launch. This design enables smooth communication between Initia Layer 1, Rollups, and external chains.

Beyond its architecture, Initia simplifies go-to-market for Rollups by providing shared infrastructure like bridging, oracles, stablecoins, and wallet integrations. This reduces the operational load and helps Interwoven Rollups launch faster.

As previously mentioned, Initia provides native bridging support through the LayerZero module, which is integrated directly into every rollup. This enables seamless, out-of-the-box interoperability with all other LayerZero-enabled chains, including other Interwoven Rollups.

In parallel, IBC is also supported, allowing Cosmos-style communication and token transfers between chains, including Interwoven Rollups. However, there are key differences in how these systems operate. While LayerZero can be implemented directly across all Interwoven Rollups with no structural limitations, IBC involves trade-offs: direct IBC connections between every pair of Initia rollups would create complex dependencies that don’t scale well. In contrast, LayerZero supports omnichain fungible tokens, allowing tokens to be burned on one chain and minted on another for a smooth, unified experience.

To improve IBC usability, Initia uses its Layer 1 as a routing hub. When tokens are bridged in, they first arrive on the Initia Layer 1, which then sends them to the destination rollup. This approach ensures consistent token origin and avoids unnecessary wrapping. For example, moving a token from rollup-1 to rollup-2 goes through Layer 1, preventing duplication and maintaining liquidity. This is made possible by custom hooks on Layer 1 that can interact with contracts across all Rollups.
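Why routing through Layer 1 avoids duplicate tokens follows from how IBC's ICS-20 transfer standard names tokens: each hop prepends the receiving port/channel to a token's denom trace, unless the token is travelling back the way it came, in which case one prefix is removed; the on-chain denom is `ibc/` followed by the uppercase SHA-256 hex of that trace. The sketch below models only that naming rule. The channel IDs are hypothetical, and `uinit` as INIT's base denom is an assumption for illustration.

```python
import hashlib

PORT = "transfer"


def receive_denom(denom: str, src_channel: str, dst_channel: str) -> str:
    """Denom trace on the receiving chain after one ICS-20 hop.

    If the denom carries the sender-side prefix, the token is returning toward
    its origin and that prefix is removed ("unwinding"); otherwise the
    receiving chain prepends its own port/channel.
    """
    prefix = f"{PORT}/{src_channel}/"
    if denom.startswith(prefix):
        return denom[len(prefix):]
    return f"{PORT}/{dst_channel}/{denom}"


def ibc_denom(trace: str) -> str:
    """On-chain denom: native denoms stay as-is, traces become ibc/<SHA-256>."""
    if "/" not in trace:
        return trace
    return "ibc/" + hashlib.sha256(trace.encode()).hexdigest().upper()


# Hypothetical channel IDs as (sender-side channel, receiver-side channel).
L1_TO_R1 = ("channel-1", "channel-0")
R1_TO_L1 = ("channel-0", "channel-1")
L1_TO_R2 = ("channel-2", "channel-0")
R1_TO_R2 = ("channel-5", "channel-7")  # a direct rollup-1 -> rollup-2 channel

on_r1 = receive_denom("uinit", *L1_TO_R1)  # transfer/channel-0/uinit
direct = receive_denom(on_r1, *R1_TO_R2)   # transfer/channel-7/transfer/channel-0/uinit
via_l1 = receive_denom(receive_denom(on_r1, *R1_TO_L1), *L1_TO_R2)  # transfer/channel-0/uinit
from_l1 = receive_denom("uinit", *L1_TO_R2)  # transfer/channel-0/uinit

print(ibc_denom(via_l1) == ibc_denom(from_l1))  # True: one canonical INIT on rollup-2
print(ibc_denom(direct) == ibc_denom(from_l1))  # False: a second, non-fungible INIT
```

A direct rollup-1 to rollup-2 hop produces a second representation of INIT on rollup-2, while unwinding to Layer 1 first lands on the same denom as a deposit straight from Layer 1, which is the consistent-origin property described above.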

One of the key challenges with Ethereum Layer 2s is the poor user experience when withdrawing assets back to mainnet, which often involves a 7-day delay due to optimistic rollup security assumptions. Initia solves this with Minitswap, a mechanism that enables near-instant transfers between Rollups and the Initia Layer 1, typically completed in seconds.

While Initia’s OP bridge allows fast deposits from L1 to a rollup, withdrawals would normally face the same 7-day delay. IBC offers fast transfers in both directions, but without a withdrawal delay it introduces security risks, especially if a rollup uses a single sequencer: a malicious sequencer could double-spend and instantly bridge assets out via IBC.

Minitswap avoids this by using a liquidity pool model. Instead of bridging, users swap tokens between L1 and rollup versions, enabling instant transfers. Pools consist of 50% INIT and are restricted to whitelisted Rollups.

To ensure safety and efficiency, the system includes:

Minitswap pools will be incentivized. Liquidity providers receive LP tokens that can be staked for rewards, integrating with Initia’s broader staking system.
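The text does not specify Minitswap's pricing curve, so the sketch below substitutes a plain constant-product (x * y = k) formula purely to illustrate the idea: a withdrawal becomes a swap against pool reserves rather than a 7-day bridge wait. The reserves and the 30-basis-point fee are hypothetical, and Minitswap's actual curve and parameters may differ.

```python
def swap_out(amount_in: float, reserve_in: float, reserve_out: float,
             fee_bps: int = 30) -> float:
    """Exact-input output of a constant-product (x * y = k) pool.

    A stand-in curve for illustration only; `fee_bps` is a hypothetical
    fee in basis points charged on the input amount.
    """
    if amount_in <= 0 or reserve_in <= 0 or reserve_out <= 0:
        raise ValueError("amounts and reserves must be positive")
    effective_in = amount_in * (10_000 - fee_bps) / 10_000
    return reserve_out * effective_in / (reserve_in + effective_in)


# Hypothetical pool: rollup-side INIT on one side, L1 INIT on the other.
rollup_init_reserve, l1_init_reserve = 1_000_000.0, 1_000_000.0
received = swap_out(10_000, rollup_init_reserve, l1_init_reserve)
print(f"withdraw 10,000 INIT instantly, receive {received:,.2f} L1 INIT")
```

Under this stand-in curve the output is always smaller than the opposite reserve, so a single withdrawal can never take more than the pool holds, and larger withdrawals pay a steeper discount to the 1:1 rate.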

The Initia Layer 1 uses Connect from Skip, a built-in oracle system powered by the Initia validator set. Validators fetch real-time price data from sources like CoinGecko and Binance, then reach consensus on these prices as part of block production. This data is embedded directly into each block and made natively available on-chain.

Developers on Initia can access reliable price feeds without needing external oracles. The same pricing data is also relayed to all Rollups, using the same structure as on Layer 1 and updated every 2 seconds. This setup is ideal for high-frequency applications like lending, trading, or derivatives, providing fast and consistent price data across the entire ecosystem.
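The text does not say how individual validator observations are combined into one price. A minimal sketch, assuming a stake-weighted median (our understanding of Connect's default aggregation, but treat it as an assumption) plus a hypothetical two-thirds stake quorum: sorting reports by price and walking up the cumulative stake means a single outlier with little stake cannot move the result.

```python
from typing import Optional


def stake_weighted_median(reports: list[tuple[float, float]],
                          total_stake: float,
                          quorum: float = 2 / 3) -> Optional[float]:
    """Aggregate (price, stake) reports from validators into one price.

    Returns None when the reporting validators hold less than `quorum` of the
    total validator stake (a hypothetical safety rule for this sketch).
    """
    reported = sum(stake for _, stake in reports)
    if total_stake <= 0 or reported <= 0 or reported < quorum * total_stake:
        return None
    cumulative = 0.0
    for price, stake in sorted(reports):
        cumulative += stake
        if 2 * cumulative >= reported:  # first price covering half the stake
            return price
    return None  # not reached when reported stake is positive


# Four validators with stakes 40/35/20/5; the 5%-stake validator reports a bad price.
reports = [(1.02, 40), (1.01, 35), (1.03, 20), (9.99, 5)]
print(stake_weighted_median(reports, total_stake=100))
```

Here the 9.99 outlier is ignored and the aggregated price is 1.02, the first price at which cumulative stake reaches half of the reported stake.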

Noble is a Cosmos-based chain that offers native USDC with CCTP support, which makes it a convenient on-ramp for Initia. Users can send USDC from Ethereum or Solana to Noble, then transfer it via IBC to Initia Layer 1 and on to any rollup. Using IBC Eureka, the full process can be completed with a single transaction signed on the source chain.

The Vested Interest Program (VIP) is Initia’s incentive system designed to align rollup teams, users, and operators through long-term participation. It distributes INIT token rewards over time based on activity and commitment.

To qualify, Rollups must be approved by Initia Layer 1 governance. Once approved, rewards are based on factors like INIT locked and overall performance, and are shared with users and operators for actions like trading and providing liquidity.

The system is composed of two reward pools:

After calculating rewards for each rollup, they are further distributed to users according to a scoring system created by the rollup team.

Source: docs.initia.xyz

Rewards are issued as escrowed INIT tokens (esINIT), which are initially non-transferable. Participants can either convert these tokens into regular INIT tokens through a vesting process, which requires meeting certain performance criteria, or lock them in a staking position. This approach incentivizes meaningful contributions and ensures sustained engagement in the network’s growth and success.

Users can unlock $esINIT based on continued engagement. For example, if a user earns $1,000 in $esINIT in Epoch 1 (a two-week cycle) through activity such as trading, they must maintain similar engagement in Epoch 2 to vest those rewards into INIT.

To boost efficiency, users can also lock their $esINIT in Enshrined Liquidity instead of vesting it.

This approach makes reward distribution highly efficient and helps each rollup incentivize the exact behavior it values, while maintaining alignment with the overall Initia economy.
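The exact vesting rule is not spelled out here, so the following is a hypothetical sketch: it assumes the share of esINIT that vests equals the user's activity score in the following epoch relative to the epoch in which the rewards were earned, capped at 100%, with the remainder staying escrowed (and available to lock in Enshrined Liquidity). `EsInitPosition` and `vest` are illustrative names, not part of any Initia API.

```python
from dataclasses import dataclass


@dataclass
class EsInitPosition:
    earned: float          # esINIT earned in the previous epoch
    previous_score: float  # the user's activity score in that epoch


def vest(position: EsInitPosition, current_score: float) -> tuple[float, float]:
    """Split esINIT into (vested INIT, still-escrowed esINIT).

    Hypothetical rule: the vested share equals current activity relative to
    the epoch in which the esINIT was earned, clamped to the range [0, 1].
    """
    if position.previous_score <= 0:
        return 0.0, position.earned
    ratio = min(1.0, max(0.0, current_score / position.previous_score))
    vested = position.earned * ratio
    return vested, position.earned - vested


position = EsInitPosition(earned=1_000, previous_score=500)
print(vest(position, current_score=500))  # (1000.0, 0.0)
print(vest(position, current_score=250))  # (500.0, 500.0)
```

Under this assumed rule, a user who earned 1,000 esINIT and keeps the same activity level vests all of it, while one whose activity halves vests 500 and keeps 500 escrowed.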

On Initia L1, block rewards are distributed to stakers who have staked INIT or whitelisted LP tokens with validators. However, not all staked tokens receive rewards equally: each token has a reward weight, which determines its share of the total block rewards.

It works as follows:

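Let’s say there are three whitelisted staking options with reward weights of 50, 30, and 20.

Total Reward Weights = 50 + 30 + 20 = 100

If total block rewards are 1,000 INIT per block, the three options receive 1,000 × 50/100 = 500 INIT, 1,000 × 30/100 = 300 INIT, and 1,000 × 20/100 = 200 INIT per block, respectively.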

Rewards are proportionally split based on the assigned weights.
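The reward-weight calculation can be written as a short function. The three asset labels are hypothetical placeholders, since the example does not name the staking options; within each option, rewards would then be shared among that option's stakers, which this sketch does not model.

```python
def split_block_rewards(block_reward: float, weights: dict[str, float]) -> dict[str, float]:
    """Split one block's rewards across staking options by reward weight."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("at least one positive reward weight is required")
    return {asset: block_reward * weight / total_weight for asset, weight in weights.items()}


# Hypothetical labels for the three whitelisted options (weights 50/30/20).
weights = {"INIT": 50, "INIT/USDC LP": 30, "INIT/ETH LP": 20}
print(split_block_rewards(1000, weights))
# {'INIT': 500.0, 'INIT/USDC LP': 300.0, 'INIT/ETH LP': 200.0}
```

Because only relative weights matter, governance can rebalance incentives between INIT and LP positions by changing weights without touching the overall emission.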

MilkyWay - a modular staking portal, from liquid staking to restaking marketplace and beyond.

Rena - the first TEE abstraction middleware supercharging verifiable AI use cases.

Echelon - a debt-driven Move app chain built on Initia. Echelon Chain is purpose built to be the debt engine of the interwoven modular economy.

Kamigotchi - an on-chain pet-idle RPG by @asph0d37, built in the modular space.

Contro - the chain for ultrafair DEXes powered by revolutionary GLOB p2p markets.

Blackwing - a modular blockchain that enables liquidation-free leverage trading for long-tail assets via a novel construct called Limitless Pools.

Rave - RAVE’s quanto perpetuals redefine not only perpetuals, but also tokens themselves by providing composable DeFi instruments.

Infinity Ground - revolutionizing AI entertainment with advanced models for mini-games, memes, and interactive stories.

Inertia - Inertia presents a new consolidated DeFi platform that integrates various assets from fragmented mainnets into one platform.

Civita - the first on-chain gamified social experiment for global domination.

Intergaze by Stargaze - the launchpad and marketplace for interwoven NFTs.

Battle for Blockchain - on-chain strategy game, set in the world of culinaris.

Lunch - the all-in-one finance app, focused on bridging Web2 to Web3.

Embr - igniting everlasting memes.

Zaar - the rollup where degeneracy comes to play.

Lazy Chain - Celestine Sloths Chain

Cabal - a Convex-like product built around Initia VIP.

Owning both the Layer 1 and rollup systems under a unified architecture allows Initia to make coordinated trade-offs that are not possible in ecosystems like Ethereum’s rollup landscape. On Ethereum, independent and competing entities each bring their own infrastructure, incentives, and priorities. This fragmentation limits the ecosystem’s ability to fully realize network effects. As a result, many Rollups end up being parasitic, capturing value without meaningfully contributing to the base layer or broader ecosystem.

Initia takes a unified approach. All components, including Layer 1, data availability, messaging, and virtual machines, operate on a shared foundation. This alignment allows the INIT economy to grow as a whole rather than in isolated parts.

Initia works like a federal system. Rollups function as independent states with room to innovate, while Initia L1 provides shared infrastructure such as monetary policy (VIP), bridging, and governance. The system is designed so that everyone has a vested interest in the growth of Initia as a whole.

Bitcoin has firmly established itself as digital gold, the apex store of value in the cryptocurrency ecosystem. Adoption has reached Wall Street: banks are expanding their crypto services and offering direct BTC exposure via ETFs. With this level of institutional integration, the next pressing question becomes how to generate yield on BTC holdings. To make things more interesting, institutions will favor solutions that optimize for security, yield, and liquidity.

This poses a fundamental challenge for any Bitcoin L2 solution (and staking): since Bitcoin lacks native yield (unless you run a miner), and serves primarily as a store of value, any yield generated in another asset faces selling pressure if the ultimate goal is to accumulate more BTC.

When Bitcoiners participate in any ecosystem, whether it's an L2, DeFi protocol, or alternative chain, their end goal remains simple: stack more sats. This creates inherent selling pressure on any token used to pay staking rewards or security budgets. While teams are developing interesting utility for alternative tokens, the reality is that without a thriving ecosystem, sustainable yield remains a pipe dream. Teams are therefore largely forced to bootstrap network effects via points or other incentives.

This brings us to a critical point: Bitcoin L2s' main competition isn't other Bitcoin L2s or BTCfi, but established ecosystems like Solana and Ethereum. Sustainable yield within a Bitcoin L2 cannot be achieved until a sufficiently robust ecosystem exists within that L2, and this remains the central challenge. Interesting new ZK rollup providers like Alpen Labs and Starknet claim they can import network effects by offering EVM compatibility on Bitcoin while enhancing security. As Bitcoin's track record as a store of value grows, making it increasingly comparable to gold, schemes to monetize the asset will become more common.

However, we need to face reality – with 86% of VC funding for these L2s allocated post-2024, we're still years away from maturity. Is it too late for Bitcoin L2s to catch up?

Security alone is no longer a sufficient differentiator. Solana and Ethereum have proven resilient enough to earn institutional trust, while Bitcoin L2s must justify their additional complexity, particularly around smart contract risk when interacting with UTXOs.

Being EVM-compatible does not automatically create network effects. It might help bring developers / dapps over, but creating a winning ecosystem flywheel will only become tougher with time. In fact, the winners of this cycle have differentiated with a product first approach (Hyperliquid, Pumpdotfun, Ethena…), not VM or tech. As such, providing extra BTC economic security or alignment won’t be enough without a killer product in the long run.

Incremental security improvements alone aren't the most compelling selling point; we've seen restaking initiatives like EigenLayer struggle with this exact issue. AVSs are generally unwilling to pay extra for security (especially since they’ve had it for free), and selling security is hard. We’ve seen the same promise of cryptoeconomic security fail before with Cosmos ICS and Polkadot parachains.

That said, Bitcoin L2s do have a compelling security advantage. They inherit Bitcoin's massive $1.2T+ security budget (hashrate), far exceeding what Solana or Ethereum can offer. For institutions prioritizing safety over yield size, this edge might matter – even if yields are somewhat lower. Bitcoin Timestamping could create a completely new market. Can L2s tap into this extra economic security and liquidity while 10x’ing product experience? Again, if your security is higher but the product is not great, it won’t matter.

BTC whales aren't primarily interested in bridging assets; they want to accumulate more Bitcoin. This raises an important question: from their perspective, is there a meaningful difference between locking BTC in an L2 versus in Solana?

Perceived risk is the key factor here. An institution might actually prefer Coinbase custody over a decentralized signer set whose operators it may not know, weighing legal risk against technical risk. This perception is heavily influenced by user experience – if a product isn't intuitive, the risk is perceived as higher. A degen whale, on the other hand, might be comfortable bridging into Solayer to farm the airdrop or ‘staking’ into Bitlayer for yield.

At Chorus One we’ve classified every staking offering to better inform our institutional clients who are interested in putting their BTC to work, following the guidance of our friends at Bitcoin Layers.

Want to dive deeper into the staking offerings available through Bitcoin Layers? Shoot our analyst Luis Nuñez (and author of this paper) a DM on X!

Since risk is a matter of perception and depends on your yield, security, and liquidity preferences, your ideal option might look like this:

And still be super convenient. We’re in an interesting period where Bitcoin TVL, or BTCfi, is increasing dramatically (led by Babylon), while the share of BTC that has remained idle for at least 1 year keeps rising, now at 60%. This tells us that Bitcoin dominance is growing thanks to institutional adoption, but that there are no compelling yield solutions yet to put that BTC to work.

Institutions have historically preferred lending BTC over exploring L2/DeFi solutions, primarily due to familiarity (Coinbase, Cantor). According to Binance, only 0.79% of BTC is locked in DeFi, meaning that DeFi lending (e.g. Aave) is not as popular. Even so, wrapped BTC in DeFi is still around 5 times larger than the amount of BTC in staking protocols.

Staking in Bitcoin Layers requires significant education. L2s like Stacks and CoreDAO use their proximity to miners to secure the system and tap into liquidity by providing incentives for contribution or merge mining. More TradFi-like operations might be an interesting differentiator for a BTC L2: we've seen significant institutional engagement in basis trades in the past, earning up to 5% yield with Deribit and other brokers.

However, lending's reputation has suffered severely since 2022. The collapses of BlockFi, Celsius, and Voyager exposed substantial custodial and counterparty risks, damaging institutional trust. As mentioned, Bitcoin L2s like Stacks offer an alternative by avoiding traditional custody while giving other parties, such as miners, a role in providing yield via staking. For those with a more passive appetite, staking can be the ideal path to yield. Today, however, staking solutions are early and offer only points with the promise of a future airdrop, with the exception of CoreDAO.

Staking in Bitcoin L2s is very different. Typically, a multi-sig of operators orders L2 transactions and timestamps a hashed representation of each block into Bitcoin. This allows the L2 state to be recreated at any point in time if the L2 is compromised; essentially, these L2s use Bitcoin for DA (Data Availability). However, consensus still depends on the multi-sig operators, so they could still collude. Innovations with ZK (Alpen Labs, Citrea), UTXO-to-smart-contract designs (Arch, Stacks), and BitVM (BOB) all aim to improve these security guarantees.
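To make the timestamping step concrete, here is a minimal Python sketch of committing an L2 block to Bitcoin through an `OP_RETURN` output. The block format, the JSON encoding, and the double SHA-256 digest are illustrative assumptions, not any specific L2's scheme. The point is that anyone can later recompute the digest to check a recreated L2 state against the anchor, while Bitcoin itself never validates the L2 transactions.

```python
import hashlib
import json

OP_RETURN = 0x6A  # opcode that marks an output as provably unspendable


def l2_block_digest(block: dict) -> bytes:
    """Double SHA-256 over a canonical JSON encoding of a hypothetical L2 block."""
    encoded = json.dumps(block, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(hashlib.sha256(encoded).digest()).digest()


def op_return_script(digest: bytes) -> bytes:
    """OP_RETURN followed by a direct push of the 32-byte digest.

    For pushes of 1 to 75 bytes, the push opcode is simply the data length.
    """
    if len(digest) != 32:
        raise ValueError("expected a 32-byte digest")
    return bytes([OP_RETURN, len(digest)]) + digest


def matches_anchor(block: dict, script: bytes) -> bool:
    """Check a recreated L2 block against the commitment found on Bitcoin."""
    return script == op_return_script(l2_block_digest(block))


block = {"height": 42, "prev": "00" * 32, "txs": ["a", "b"]}
script = op_return_script(l2_block_digest(block))
print(script[:2].hex())                                        # 6a20
print(matches_anchor(block, script))                           # True
print(matches_anchor({**block, "txs": ["a", "c"]}, script))    # False
```

Nothing here stops the operators from anchoring the digest of an invalid block: the anchor only fixes *which* block they committed to, which is why consensus still depends on the multi-sig.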

In Ethereum, leading L2s typically have a single sequencer (vs. a multi-sig) that settles transactions to the L1. Critically, however, the Ethereum L1 can verify fraud proofs, allowing an invalid L2 state commitment to be challenged and rolled back if there's a malicious transaction. The Bitcoin L1 lacks these verification capabilities, so this is not possible… until BitVM?

BitVM aims to allow fraud proofs on the Bitcoin L1. BitVM potentially offers a 10x improvement in security for Bitcoin L2s, but it comes with significant operational challenges.

BitVM is a magnificent project where leaders from every ecosystem are collaborating to make it a reality. We’ve seen potentially drastic improvements between BitVM1 and BitVM2:

BitVM allows fraud proofs to happen through a sequence of standard Bitcoin transactions with carefully crafted scripts. At its core, verification in BitVM works as follows:

1. Program Decomposition

Before any transactions occur, the program to be verified (like a SNARK verifier) is split into sub-programs that each fit within a Bitcoin block:

2. Operator Claim

The operator executes the entire program off-chain and claims:

They commit to all these values using cryptographic commitments in their on-chain transactions.
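The claim-and-challenge flow in these steps can be illustrated with a toy Python model. The four arithmetic sub-programs, the integer states, and the use of plain SHA-256 hashes as commitments are simplifying assumptions: BitVM uses its own commitment scheme and checks real verifier chunks in Bitcoin Script. What the sketch does preserve is the key property that a challenger only needs to find one inconsistent step, and the on-chain Disprove check only re-executes that single sub-program.

```python
import hashlib
from typing import Callable, List, Optional

# Hypothetical sub-programs f_1..f_n; in BitVM these would be chunks of a
# SNARK verifier, each small enough to run inside one Bitcoin script.
SUB_PROGRAMS: List[Callable[[int], int]] = [
    lambda z: z + 7,
    lambda z: z * 3,
    lambda z: z - 5,
    lambda z: z * z,
]


def commit(value: int) -> str:
    """Stand-in for the operator's on-chain commitment to an intermediate value."""
    return hashlib.sha256(str(value).encode()).hexdigest()


def operator_claim(z0: int, cheat_at: Optional[int] = None) -> List[int]:
    """Operator runs every sub-program off-chain and publishes z_0..z_n."""
    states = [z0]
    for i, f in enumerate(SUB_PROGRAMS):
        out = f(states[-1])
        if i == cheat_at:
            out += 1  # a dishonest operator publishes a wrong intermediate value
        states.append(out)
    return states


def find_faulty_step(states: List[int]) -> Optional[int]:
    """Challenger re-executes off-chain and returns the first inconsistent step."""
    for i, f in enumerate(SUB_PROGRAMS):
        if f(states[i]) != states[i + 1]:
            return i
    return None


def disprove(i: int, z_in: int, z_out: int, commitments: List[str]) -> bool:
    """The check a Disprove transaction performs for step i.

    It succeeds only if the revealed values match the operator's commitments
    and re-running f_i on z_in does not produce z_out.
    """
    opened = commit(z_in) == commitments[i] and commit(z_out) == commitments[i + 1]
    return opened and SUB_PROGRAMS[i](z_in) != z_out


states = operator_claim(2, cheat_at=2)
commitments = [commit(z) for z in states]
step = find_faulty_step(states)
print(step)                                                         # 2
print(disprove(step, states[step], states[step + 1], commitments))  # True
```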

3. Challenge Initiation

When a challenger believes the operator is lying:

4. The Critical On-Chain Execution

Here's where Bitcoin nodes perform the actual verification:

The challenger creates a "Disprove" transaction that:

5. Bitcoin Consensus in Action

When nodes process this transaction:

The Bitcoin network reaches consensus on this result just as it does on the validity of any other transaction. The technology enables Bitcoin-native verification of arbitrary computations without changing Bitcoin's consensus rules. This opens the door to more sophisticated smart contracts secured directly by Bitcoin, but the implementation hurdles are substantial, since operators need to front the liquidity and face several risks:

As such, incentives to operate the bridge will likely be quite attractive, to compensate for these risks. If we’re able to mitigate them, security will be significantly enhanced, and BitVM might even provide interoperability between different layers, which could unlock interesting use cases while retaining proximity to Bitcoin. Will this proximity allow for the creation of killer products and real yields?

For a Bitcoin L2 to succeed, it must offer products unavailable elsewhere or provide substantially better user experiences. The previously mentioned Bitcoin proximity has to be exploited for differentiation.

The jury is still out on whether ZK rollup initiatives can bootstrap meaningful network effects. These rollups will ultimately need a killer app to thrive, or will need to port apps from the EVM with the promise of Bitcoin liquidity. Otherwise, why would dapps choose to settle on Bitcoin?

The winning strategy for Bitcoin L2s involves:

Below, we’ll dive into some of my top institutional picks, a few of which we’ve invested in.

Babylon’s main value-add is to provide Bitcoin economic security. As we’ve mentioned several times, this offering alone will not be enough, and the team is well aware. Personally, I'm bullish on the app-chain approach, following models like Avalanche or Cosmos, but simply using BTC for the initial bootstrap of security and liquidity.

While the app-chain thesis represents the endgame, reaching network effects requires 10x the effort since everything is naturally fragmented. Success demands an extremely robust supporting framework – something only Cosmos has arguably achieved with sufficient decentralization (and has suffered the consequences). Avalanche provides the centralized support needed to unify a fragmented ecosystem.

The ideal endgame resembles apps in the App Store – distinct from each other but with clear commonalities. In this analogy, Bitcoin serves as the iPhone – the trusted foundation for distribution.

Mezo (investor)

Mezo's approach with mUSD is particularly interesting as it reduces token selling pressure if mUSD gains significant utility. Their focus on "real world" applications could drive mainstream adoption, with Bitcoin-backed loans as the centerpiece. Offering fixed rates as low as 1% unlocks interesting DeFi use cases around looping with reduced risk, while undercutting costs compared to Coinbase + Morpho BTC lending offerings (at around 5%).
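A quick back-of-the-envelope sketch shows why a low fixed rate matters for looping. It assumes a hypothetical $100,000 BTC deposit, a 50% loan-to-value ratio, and three loops (borrow mUSD, buy BTC, redeposit), so the borrowed amount follows a geometric series; the 1% and 5% rates are the ones quoted above. Swap fees, price moves, and liquidation risk are ignored.

```python
def loop_position(collateral_usd: float, ltv: float, loops: int, rate: float):
    """Return (exposure, debt, annual_interest) for a simple borrow-and-redeposit loop."""
    exposure, deposit = 0.0, collateral_usd
    for _ in range(loops + 1):
        exposure += deposit  # every redeposit adds BTC exposure
        deposit *= ltv       # the next loop can only borrow ltv times the last deposit
    debt = exposure - collateral_usd
    return exposure, debt, debt * rate


for rate in (0.01, 0.05):
    exposure, debt, interest = loop_position(100_000, 0.5, 3, rate)
    print(f"rate {rate:.0%}: exposure ${exposure:,.0f}, debt ${debt:,.0f}, interest ${interest:,.0f}/yr")
# rate 1%: exposure $187,500, debt $87,500, interest $875/yr
# rate 5%: exposure $187,500, debt $87,500, interest $4,375/yr
```

At the same leverage, the annual carry cost at 1% is a fifth of the cost at 5%, and a fixed rate keeps that cost predictable for the life of the loop.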

Plasma (investor)

Purpose-built for stablecoin usage. Zero-fee USDT transfers, parallel execution, and strong distribution strategies position Plasma well in the ecosystem. Other features include confidential transactions and high customization around gas and fees.

Arch is following the MegaETH approach: curating a mafia ecosystem, building a parallel execution environment, and keeping close ties to Solana. In Arch, users send assets directly to smart contracts using native Bitcoin transactions.

Stacks has a very interesting setup since stakers face no selling pressure (they earn BTC rather than STX). As the oldest and most recognized Bitcoin L2 brand, it has significant advantages. While Clarity, its smart contract language, presents challenges, this may be changing with innovations such as smart-contract-to-Bitcoin-transaction capabilities in development and support for other programming languages. StackingDAO (investor) is the leading LST in the ecosystem and provides interesting yield opportunities in both liquid STX and liquid sBTC.


BOB (Building on Bitcoin)

BOB is at the forefront of BitVM development (targeting mainnet in 2025) and is looking to use Babylon for security bootstrapping. The team is doing a fantastic job of exploiting its proximity to BTC with BitVM while developing institutional-grade products.

CoreDAO features strong LST adoption tailored for institutions and is the only staking yield mechanism that is live and pays out real yield rather than points. CoreDAO Ventures is doing a great job of backing teams early in their development.

Botanix is the leading multi-sig setup with its Spiderchain, where each BTC bridged to the chain is controlled by a new, randomized multi-sig, increasing robustness by providing ‘forward security’. Interestingly, Botanix will not have its own token (at least initially) and will only use BTC and pBTC, meaning rewards and fees will be paid in BTC.
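The idea of giving each deposit its own randomly chosen signer set can be sketched as follows. This is not Botanix's actual Spiderchain algorithm: the operator list, the seeds (imagined as recent Bitcoin block hashes, shown here as placeholder byte strings), the committee size, and the "more than two thirds" threshold are all hypothetical. Deriving the selection from a public seed lets every participant recompute the same committee, and binding each deposit to its own committee means compromising one set of signers does not automatically expose funds held by the others.

```python
import hashlib
import random
from typing import List


def select_signers(operators: List[str], seed: bytes, size: int) -> List[str]:
    """Deterministically sample a fresh signer committee for one deposit."""
    rng = random.Random(hashlib.sha256(seed).digest())
    return sorted(rng.sample(operators, size))


def threshold(size: int) -> int:
    """Signatures required, assuming a 'more than two thirds' rule."""
    return (2 * size) // 3 + 1


operators = [f"op{i:02d}" for i in range(20)]
for deposit_seed in (b"block-840000", b"block-840001"):  # placeholder seeds
    committee = select_signers(operators, deposit_seed, 9)
    print(committee, f"{threshold(9)}-of-{len(committee)}")
```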

For retail users, four standout solutions I like:

Bitcoin L2s face significant challenges in their quest for adoption and sustainability. The inherent tension between Bitcoin's store-of-value proposition and the yield-generating mechanisms of L2s creates fundamental hurdles. However, projects that can offer unique capabilities, seamless user experiences, and compelling institutional cases have the potential to overcome these obstacles and carve out valuable niches in the expanding Bitcoin ecosystem.

The key to success lies not in merely replicating what Ethereum or Solana already offer, but in leveraging Bitcoin's unique strengths to create complementary solutions that expand the utility of the world's leading cryptocurrency without compromising its fundamental value proposition. Adoption is one killer product away.

Want to learn more about yield opportunities on Bitcoin? Reach out to us at research@chorus.one and let’s chat!

In the world of blockchain technology, where every millisecond counts, the speed of light isn’t just a scientific constant—it’s a hard limit that defines the boundaries of performance. As Kevin Bowers highlighted in his article Jump Vs. the Speed of Light, the ultimate bottleneck for globally distributed systems, like those used in trading and blockchain, is the physical constraint of how fast information can travel.

To put this into perspective, light travels at approximately 299,792 km/s in a vacuum, but in fiber optic cables (the backbone of internet communication), it slows to about 200,000 km/s due to the medium's refractive index. This might sound fast, but when you consider the distances involved in a global network, delays become significant. For example:

For applications like high-frequency trading or blockchain consensus mechanisms, this delay is simply too long. In decentralized systems, the problem worsens because nodes must exchange multiple messages to reach agreement (e.g., propagating a block and confirming it). Each round-trip adds to the latency, making the speed of light a "frustrating constraint" when near-instant coordination is the goal.
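The arithmetic behind this constraint is easy to reproduce. The sketch below uses the vacuum and fiber speeds quoted above; the 10,000 km distance (roughly intercontinental) and the three message round trips are illustrative assumptions. It computes only propagation delay, so real latencies will be higher once routing, queuing, and processing are added.

```python
C_VACUUM_KM_S = 299_792  # speed of light in a vacuum (km/s)
C_FIBER_KM_S = 200_000   # approximate speed of light in optical fiber (km/s)


def one_way_ms(distance_km: float, speed_km_s: float = C_FIBER_KM_S) -> float:
    """Pure propagation delay in milliseconds."""
    return distance_km * 1_000 / speed_km_s


def consensus_floor_ms(distance_km: float, round_trips: int) -> float:
    """Lower bound for a protocol that needs `round_trips` request/response exchanges."""
    return 2 * one_way_ms(distance_km) * round_trips


print(one_way_ms(10_000))                           # 50.0
print(round(one_way_ms(10_000, C_VACUUM_KM_S), 1))  # 33.4
print(consensus_floor_ms(10_000, 3))                # 300.0
```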

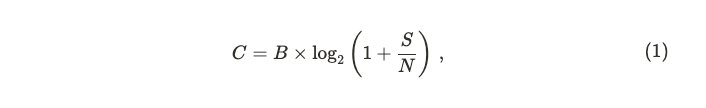

Beyond the physical delay imposed by the speed of light, blockchain networks face an additional challenge rooted in information theory: the Shannon Capacity Theorem. This theorem defines the maximum rate at which data can be reliably transmitted over a communication channel. It’s expressed as:

$$C = B \log_2\left(1 + \frac{S}{N}\right)$$

where C is the channel capacity (bits per second), B is the bandwidth (in hertz), and S/N is the signal-to-noise ratio. In simpler terms, the theorem tells us that even with a perfect, lightspeed connection, there’s a ceiling on how much data a network can handle, determined by its bandwidth and the quality of the signal.

For blockchain systems, this is a critical limitation because they rely on broadcasting large volumes of transaction data to many nodes simultaneously. So, even if we could magically eliminate latency, the Shannon Capacity Theorem reminds us that the network’s ability to move data is still finite. For blockchains aiming for mass adoption—like Solana, which targets thousands of transactions per second—this dual constraint of light speed and channel capacity is a formidable hurdle.
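Putting the Shannon capacity formula next to a rough demand estimate shows how the two constraints interact. The 1 GHz bandwidth, the 30 dB signal-to-noise ratio, the 10,000 TPS target, and the 200 copies are hypothetical; 1,232 bytes is the maximum size of a serialized Solana transaction. Sending every transaction to every peer is deliberately naive, since Solana's Turbine protocol exists precisely to avoid that fan-out.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """C = B * log2(1 + S/N), with S/N as a linear power ratio."""
    return bandwidth_hz * math.log2(1 + snr_linear)


def db_to_linear(snr_db: float) -> float:
    """Convert a signal-to-noise ratio in decibels to a linear ratio."""
    return 10 ** (snr_db / 10)


def required_bps(tps: float, tx_bytes: int, copies: int = 1) -> float:
    """Payload bit rate if each transaction has to be sent `copies` times."""
    return tps * tx_bytes * 8 * copies


capacity = shannon_capacity_bps(1e9, db_to_linear(30))  # hypothetical 1 GHz channel
demand = required_bps(10_000, 1_232, copies=200)        # naive broadcast to 200 peers
print(f"capacity ~ {capacity / 1e9:.2f} Gbit/s, demand ~ {demand / 1e9:.2f} Gbit/s")
```

Even with zero latency, a naive broadcast at this rate would exceed the channel's theoretical ceiling, which is why data propagation has to be engineered rather than brute-forced.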

In a computing landscape where recent technological advances have prioritized fitting more cores into a CPU rather than making them faster, and where the speed of light emerges as the ultimate bottleneck, the Jump team refuses to settle for off-the-shelf solutions or the short-term fix of buying more hardware. Instead, it reimagines existing solutions to extract maximum performance from the network layer, optimizing data transmission, reducing latency, and enhancing reliability to combat the "noise" of packet loss, congestion, and global delays.

The Firedancer project is about tailoring this concept for a blockchain world where every microsecond matters, breaking the paralysis in decision-making that arises when systems have many unoptimized components.

Firedancer is a high-performance validator client for the Solana blockchain, written in C by Jump Crypto, a division of Jump Trading focused on advancing blockchain technologies. Unlike traditional validator clients that rely on generic software stacks and incremental hardware upgrades, Firedancer is a ground-up reengineering of how a blockchain node operates. Its mission is to push the Solana network to the very limits of what’s physically possible, addressing the dual constraints of light speed and channel capacity head-on.

At its core, Firedancer is designed to optimize every layer of the system, from data transmission to transaction processing. It proposes a major rewrite of the three functional components of the Agave client: networking, runtime, and consensus mechanism.

Firedancer is a big project, and for this reason it is being developed incrementally. The first Firedancer validator is nicknamed Frankendancer: Firedancer’s networking layer grafted onto the Agave runtime and consensus code. Specifically, Frankendancer has implemented the following parts:

All other functionality is retained by Agave, including the runtime itself which tracks account state and executes transactions.

In this article, we’ll dive into on-chain data to compare the performance of the Agave client with Frankendancer. Through data-driven analysis, we quantify whether these advancements are visible on-chain in Solana’s performance. Since we rely only on on-chain data, not all improvements will be visible through this analysis.

You can walk through all the data used in this analysis via our dedicated dashboard.

While signature verification and block distribution engines are difficult to track using on-chain data, studying the dynamical behaviour of transactions can provide useful information about QUIC implementation and block packing logic.

Transactions on Solana are encoded and sent from clients to validators over QUIC streams, cfr. here. QUIC is relevant during the FetchStage, where incoming packets are batched (up to 128 per batch) and prepared for further processing. It operates at the kernel level, ensuring efficient network input handling. This makes QUIC a relevant piece of the Transaction Processing Unit (TPU) on Solana, the part of the validator responsible for block production. Improving QUIC ultimately means gaining control over transaction propagation. In this section we compare the Agave QUIC implementation with Frankendancer's fd_quic, the C implementation of QUIC by Jump Crypto.

The first difference lies in connection management. Agave utilizes a connection cache to manage connections, implemented via the solana_connection_cache module, meaning there is a lookup mechanism for reusing or tracking existing connections. It also employs an AsyncTaskSemaphore to limit the number of asynchronous tasks (set to a maximum of 2000 tasks by default). This semaphore ensures that the system does not spawn excessive tasks, providing a basic form of concurrency control.
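Agave's AsyncTaskSemaphore is Rust code; the snippet below is only a Python analogue of the same concurrency-control idea, using `asyncio.Semaphore` to cap how many sends can be in flight at once. The 2,000 limit mirrors the default quoted above, and `asyncio.sleep(0)` is a placeholder for the actual network write.

```python
import asyncio
from typing import Dict, Iterable

MAX_TASKS = 2000  # default cap on concurrent asynchronous tasks, as described above


async def send(packet: bytes, limiter: asyncio.Semaphore, stats: Dict[str, int]) -> None:
    async with limiter:  # waits here once `limit` sends are already in flight
        stats["in_flight"] += 1
        stats["peak"] = max(stats["peak"], stats["in_flight"])
        await asyncio.sleep(0)  # placeholder for the real network I/O
        stats["in_flight"] -= 1


async def send_all(packets: Iterable[bytes], limit: int = MAX_TASKS) -> int:
    """Send every packet concurrently and return the peak number of in-flight sends."""
    limiter = asyncio.Semaphore(limit)
    stats = {"in_flight": 0, "peak": 0}
    await asyncio.gather(*(send(p, limiter, stats) for p in packets))
    return stats["peak"]


peak = asyncio.run(send_all([b"tx"] * 10_000))
print(peak <= MAX_TASKS)  # True
```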

Frankendancer implements a more explicit and granular connection management system using a free list (state->free_conn_list) and a connection map (fd_quic_conn_map) based on connection IDs. This allows precise tracking and allocation of connection resources. It also leverages receive-side scaling and kernel bypass technologies like XDP/AF_XDP to distribute incoming traffic across CPU cores with minimal overhead, enhancing scalability and performance, cfr. here. It does not rely on semaphores for task limiting; instead, it uses a service queue (svc_queue) with scheduling logic (fd_quic_svc_schedule) to manage connection lifecycle events, indicating a more sophisticated event-driven approach.

Frankendancer also implements a stream handling pipeline. Precisely, fd_quic provides explicit stream management with functions like fd_quic_conn_new_stream() for creation, fd_quic_stream_send() for sending data, and fd_quic_tx_stream_free() for cleanup. Streams are tracked using a fd_quic_stream_map indexed by stream IDs.
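The resource-management pattern described for fd_quic (a preallocated pool, a free list, a map keyed by connection ID, a time-ordered service queue, and per-connection stream maps) can be illustrated in Python. This is a hypothetical sketch of the pattern, not fd_quic's code: the class and method names, capacity, and timeout values are invented, and a binary heap stands in for the service queue. One QUIC detail is real: stream IDs of a given type advance in steps of 4, because the two low bits encode the initiator and direction.

```python
import heapq
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Conn:
    idx: int                      # fixed slot in the preallocated pool
    conn_id: Optional[int] = None
    deadline: float = 0.0
    streams: Dict[int, bytes] = field(default_factory=dict)  # stream ID -> buffered data
    next_stream_id: int = 0


class ConnPool:
    """Preallocated connections + free list + conn-ID map + deadline-ordered service queue."""

    def __init__(self, capacity: int) -> None:
        self.slots = [Conn(i) for i in range(capacity)]
        self.free_list: List[int] = list(range(capacity - 1, -1, -1))
        self.conn_map: Dict[int, Conn] = {}
        self.svc_queue: List[Tuple[float, int]] = []  # (deadline, conn_id)

    def open(self, conn_id: int, now: float, timeout: float) -> Optional[Conn]:
        if not self.free_list:
            return None  # pool exhausted: refuse rather than allocate
        conn = self.slots[self.free_list.pop()]
        conn.conn_id, conn.deadline = conn_id, now + timeout
        self.conn_map[conn_id] = conn
        heapq.heappush(self.svc_queue, (conn.deadline, conn_id))
        return conn

    def new_stream(self, conn: Conn) -> int:
        sid = conn.next_stream_id
        conn.next_stream_id += 4  # same-type QUIC stream IDs are spaced by 4
        conn.streams[sid] = b""
        return sid

    def close(self, conn_id: int) -> None:
        conn = self.conn_map.pop(conn_id)
        conn.conn_id, conn.streams, conn.next_stream_id = None, {}, 0
        self.free_list.append(conn.idx)  # the slot is reused, never freed

    def service(self, now: float) -> List[int]:
        """Close every connection whose deadline has passed; return their IDs."""
        expired = []
        while self.svc_queue and self.svc_queue[0][0] <= now:
            deadline, cid = heapq.heappop(self.svc_queue)
            conn = self.conn_map.get(cid)
            if conn is not None and conn.deadline == deadline:  # skip stale entries
                self.close(cid)
                expired.append(cid)
        return expired


pool = ConnPool(capacity=2)
a = pool.open(101, now=0.0, timeout=5.0)
pool.open(202, now=1.0, timeout=5.0)
print(pool.open(303, now=1.0, timeout=5.0))    # None: no free slot
print(pool.new_stream(a), pool.new_stream(a))  # 0 4
print(pool.service(now=5.5))                   # [101]
```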

Finally, for packet processing, Agave's approach focuses on basic packet sending and receiving, with asynchronous methods like send_data_async() and send_data_batch_async().

Frankendancer implements detailed packet processing with specific handlers for different packet types: fd_quic_handle_v1_initial(), fd_quic_handle_v1_handshake(), fd_quic_handle_v1_retry(), and fd_quic_handle_v1_one_rtt(). These functions parse and process packets according to their QUIC protocol roles.
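Dispatching on packet type follows the QUIC v1 header layout defined in RFC 9000: the top bit of the first byte selects a long or short header, bits 4 and 5 of a long header carry the packet type, and a long header with version 0 is a Version Negotiation packet. The Python sketch below classifies packets that way and routes the four types named above to handlers; the handler names are hypothetical labels echoing fd_quic's, and everything else is simply dropped.

```python
from typing import Callable, Dict

LONG_HEADER_TYPES = {0x0: "initial", 0x1: "0rtt", 0x2: "handshake", 0x3: "retry"}


def classify(packet: bytes) -> str:
    if not packet:
        return "malformed"
    first = packet[0]
    if (first & 0x80) == 0:  # header form bit clear: short header (1-RTT)
        return "one_rtt"
    if len(packet) < 5:      # a long header needs the first byte plus a 4-byte version
        return "malformed"
    if int.from_bytes(packet[1:5], "big") == 0:
        return "version_negotiation"
    return LONG_HEADER_TYPES[(first & 0x30) >> 4]


HANDLERS: Dict[str, Callable[[bytes], str]] = {
    "initial": lambda p: "handle_v1_initial",
    "handshake": lambda p: "handle_v1_handshake",
    "retry": lambda p: "handle_v1_retry",
    "one_rtt": lambda p: "handle_v1_one_rtt",
}


def dispatch(packet: bytes) -> str:
    handler = HANDLERS.get(classify(packet))
    return handler(packet) if handler else "drop"


print(dispatch(bytes([0xC3, 0, 0, 0, 1])))  # handle_v1_initial
print(dispatch(bytes([0x41, 0xAB])))        # handle_v1_one_rtt
```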

Differences in QUIC implementation can be seen on-chain at the transaction level. Indeed, a more "sophisticated" QUIC implementation means better handling of packets and ultimately more room for optimization when passing them to the block packing logic.

After the FetchStage and the SigVerifyStage (which verifies the cryptographic signatures of transactions to ensure they are valid and authorized) comes the Banking stage, where verified transactions are processed.

At the core of the Banking stage is the scheduler. It represents a critical component of any validator client, as it determines the order and priority of transaction processing for block producers.

Agave implements a central scheduler, introduced in v1.18. Its main purpose is to loop continuously, checking the incoming queue of transactions, processing them as they arrive, and routing each one to an appropriate thread for further processing. It prioritizes transactions according to:

The scheduler is responsible for pulling transactions from the receiver channel, and sending them to the appropriate worker thread based on priority and conflict resolution. The scheduler maintains a view of which account locks are in-use by which threads, and is able to determine which threads a transaction can be queued on. Each worker thread will process batches of transactions, in the received order, and send a message back to the scheduler upon completion of each batch. These messages back to the scheduler allow the scheduler to update its view of the locks, and thus determine which future transactions can be scheduled, cfr. here.
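The lock bookkeeping described above can be sketched in Python. This is a simplified model written for illustration, not Agave's implementation: the class name is hypothetical, and it only captures the core rule that a transaction can be queued on a thread if every conflicting lock it needs is held by that same thread (write locks conflict with everything, read locks only with writes). An empty result means the transaction has to wait.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Set


class ThreadLockView:
    """Which worker threads currently hold read/write locks on which accounts."""

    def __init__(self, num_threads: int) -> None:
        self.threads = set(range(num_threads))
        self.write_locks: Dict[str, Counter] = defaultdict(Counter)
        self.read_locks: Dict[str, Counter] = defaultdict(Counter)

    @staticmethod
    def _holders(table: Dict[str, Counter], acct: str) -> Set[int]:
        return {t for t, n in table.get(acct, Counter()).items() if n > 0}

    def schedulable_threads(self, writes: Iterable[str], reads: Iterable[str]) -> Set[int]:
        allowed = set(self.threads)
        # A write conflicts with any holder; a read conflicts only with writers.
        conflicts = [self._holders(self.write_locks, a) | self._holders(self.read_locks, a)
                     for a in writes]
        conflicts += [self._holders(self.write_locks, a) for a in reads]
        for holders in conflicts:
            if len(holders) > 1:
                return set()  # conflicting locks spread over several threads: must wait
            if holders:
                allowed &= holders  # must join the single thread that holds the lock
        return allowed

    def lock(self, thread: int, writes: Iterable[str], reads: Iterable[str]) -> None:
        for a in writes:
            self.write_locks[a][thread] += 1
        for a in reads:
            self.read_locks[a][thread] += 1

    def unlock(self, thread: int, writes: Iterable[str], reads: Iterable[str]) -> None:
        for a in writes:
            self.write_locks[a][thread] -= 1
        for a in reads:
            self.read_locks[a][thread] -= 1


view = ThreadLockView(num_threads=4)
view.lock(1, writes=["A"], reads=["B"])
print(view.schedulable_threads(writes=["A"], reads=[]))  # {1}
print(view.schedulable_threads(writes=[], reads=["B"]))  # {0, 1, 2, 3}
```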

Frankendancer implements its own scheduler in fd_pack. Within fd_pack, transactions are prioritized based on their reward-to-compute ratio—calculated as fees (in lamports) divided by estimated CUs—favoring those offering higher rewards per resource consumed. This prioritization happens within treaps, a blend of binary search trees and heaps, providing O(log n) access to the highest-priority transactions. Three treaps—pending (regular transactions), pending_votes (votes), and pending_bundles (bundled transactions)—segregate types, with votes balanced via reserved capacity and bundles ordered using a mathematical encoding of rewards to enforce FIFO sequencing without altering the treap’s comparison logic.
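The reward-to-compute ordering can be sketched with Python's `heapq`. A binary heap stands in for fd_pack's treaps here: both give O(log n) insertion and removal of the highest-priority entry, although a treap also supports efficient removal of arbitrary entries. The class and method names are hypothetical, bundle handling is omitted, and `Fraction` is used so equal ratios compare exactly, with arrival order breaking ties (an assumption of this sketch).

```python
import heapq
import itertools
from fractions import Fraction
from typing import Dict, List, Optional, Tuple

Entry = Tuple[Fraction, int, str]  # (-fee/CU ratio, arrival sequence, signature)


class PackQueues:
    """Separate priority queues for regular transactions and votes (bundles omitted)."""

    def __init__(self) -> None:
        self.queues: Dict[str, List[Entry]] = {"pending": [], "pending_votes": []}
        self._seq = itertools.count()

    def insert(self, kind: str, sig: str, fee_lamports: int, est_cus: int) -> None:
        ratio = Fraction(fee_lamports, est_cus)  # reward per compute unit
        # heapq is a min-heap, so push the negated ratio to pop the best first.
        heapq.heappush(self.queues[kind], (-ratio, next(self._seq), sig))

    def pop_best(self, kind: str) -> Optional[str]:
        queue = self.queues[kind]
        return heapq.heappop(queue)[2] if queue else None


pq = PackQueues()
pq.insert("pending", "a", fee_lamports=5_000, est_cus=200_000)
pq.insert("pending", "b", fee_lamports=20_000, est_cus=100_000)
pq.insert("pending", "c", fee_lamports=10_000, est_cus=50_000)
print([pq.pop_best("pending") for _ in range(3)])  # ['b', 'c', 'a']
```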

Scheduling, driven by fd_pack_schedule_next_microblock, pulls transactions from these treaps to build microblocks for banking tiles, respecting limits on CUs, bytes, and microblock counts. It ensures votes get fair representation while filling remaining space with high-priority non-votes, tracking usage via cumulative_block_cost and data_bytes_consumed.

To resolve conflicts, it uses bitsets, fixed-size sequences of bits that work like quick-reference maps. Two bitsets per transaction, rw_bitset (read/write) and w_bitset (write-only), map account usage to bits, enabling O(1) intersection checks against the global bitset_rw_in_use and bitset_w_in_use. Overlaps signal conflicts (e.g., write-write or read-write clashes), and the transaction is skipped. For heavily contested accounts (exceeding PENALTY_TREAP_THRESHOLD of 64 references), fd_pack diverts transactions to penalty treaps, delaying them until the account frees up, then promoting the best candidate back to pending upon microblock completion. A slow-path check via acct_in_use, a map of account locks per bank tile, ensures precision when bitsets flag potential issues.
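The bitset conflict check, combined with the compute-unit budget from the microblock-building step, can be sketched with Python integers as bitsets. Assumptions: accounts get bit positions in first-seen order (fd_pack maps accounts to bits differently), transactions arrive already sorted by priority, and penalty treaps and the slow-path `acct_in_use` check are left out. A transaction conflicts if anything it touches is being written, or if anything it writes is being read or written.

```python
from typing import Dict, Iterable, List, Sequence, Tuple

Tx = Tuple[str, int, Sequence[str], Sequence[str]]  # (signature, CUs, writes, reads)


class BitIndex:
    """Hypothetical account -> bit-position map (first-seen order)."""

    def __init__(self) -> None:
        self.bits: Dict[str, int] = {}

    def mask(self, accounts: Iterable[str]) -> int:
        m = 0
        for acct in accounts:
            m |= 1 << self.bits.setdefault(acct, len(self.bits))
        return m


def build_microblock(txs: Iterable[Tx], index: BitIndex, cu_limit: int) -> Tuple[List[str], int]:
    """Greedily pack priority-sorted transactions that neither conflict nor exceed the CU limit."""
    in_use_rw = in_use_w = 0
    used_cus = 0
    chosen: List[str] = []
    for sig, cus, writes, reads in txs:
        w = index.mask(writes)
        rw = w | index.mask(reads)
        if rw & in_use_w or w & in_use_rw:
            continue  # touches an account being written, or writes one already in use
        if used_cus + cus > cu_limit:
            continue  # would overflow the microblock's compute budget
        chosen.append(sig)
        used_cus += cus
        in_use_rw |= rw
        in_use_w |= w
    return chosen, used_cus


txs = [
    ("t1", 300, ["A"], ["B"]),
    ("t2", 200, ["B"], []),     # writes B, which t1 reads: conflict
    ("t3", 200, [], ["B"]),     # read-read on B is fine
    ("t4", 600, ["C"], []),     # would exceed the CU limit
    ("t5", 100, ["D"], ["A"]),  # reads A, which t1 writes: conflict
]
result = build_microblock(txs, BitIndex(), cu_limit=800)
print(result)  # (['t1', 't3'], 500)
```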

Vote fees on Solana are a vital economic element of its consensus mechanism, ensuring network security and encouraging validator participation. In Solana’s delegated Proof of Stake (dPoS) system, each active validator submits one vote transaction per slot to confirm the leader’s proposed block, with an optimal delay of one slot. Delays, however, can shift votes into subsequent slots, causing the number of vote transactions per slot to exceed the active validator count. Under the current implementation, vote transactions compete with regular transactions for Compute Unit (CU) allocation within a block, influencing resource distribution.

Data reveals that the Frankendancer client includes more vote transactions than the Agave client, resulting in greater CU allocation to votes. To evaluate this difference, a dynamic Kolmogorov-Smirnov (KS) test can be applied. This non-parametric test compares two distributions by calculating the maximum difference between their Cumulative Distribution Functions (CDFs), assessing whether they originate from the same population. Unlike parametric tests with specific distributional assumptions, the KS-test’s flexibility suits diverse datasets, making it ideal for detecting behavioral shifts in dynamic systems. The test yields a p-value, where a low value (less than 0.05) indicates a significant difference between distributions.
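For readers who want to reproduce this kind of comparison, here is a dependency-free Python sketch of the two-sample KS test: D is the largest gap between the two empirical CDFs, and the p-value uses the standard asymptotic Kolmogorov approximation. In practice `scipy.stats.ks_2samp` does the same job more carefully, including exact p-values for small samples. The `dynamic_ks` helper, which reruns the test on successive windows such as epochs, is our assumption about what a "dynamic" KS test looks like, not the article's exact procedure.

```python
import math
from bisect import bisect_right
from typing import List, Sequence, Tuple


def ks_2samp(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (D, p_value) for the two-sample Kolmogorov-Smirnov test."""
    xs, ys = sorted(x), sorted(y)
    n, m = len(xs), len(ys)
    d = 0.0
    for v in xs + ys:  # the largest CDF gap occurs at an observed value
        gap = abs(bisect_right(xs, v) / n - bisect_right(ys, v) / m)
        d = max(d, gap)
    ne = n * m / (n + m)  # effective sample size
    lam = (math.sqrt(ne) + 0.12 + 0.11 / math.sqrt(ne)) * d
    return d, kolmogorov_q(lam)


def kolmogorov_q(lam: float) -> float:
    """Asymptotic tail Q(lam) = 2 * sum_{k>=1} (-1)**(k-1) * exp(-2 k^2 lam^2)."""
    if lam < 0.2:
        return 1.0  # the series converges slowly here, and Q is indistinguishable from 1
    total = 0.0
    for k in range(1, 101):
        term = 2 * (-1) ** (k - 1) * math.exp(-2 * k * k * lam * lam)
        total += term
        if abs(term) < 1e-12:
            break
    return min(max(total, 0.0), 1.0)


def dynamic_ks(a_windows: List[Sequence[float]], b_windows: List[Sequence[float]],
               alpha: float = 0.05) -> List[bool]:
    """Rerun the test per window (e.g. per epoch); True marks a significant difference."""
    return [ks_2samp(a, b)[1] < alpha for a, b in zip(a_windows, b_windows)]


d, p = ks_2samp(list(range(50)), list(range(100, 150)))
print(d, p < 0.05)  # 1.0 True
```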

When comparing CU usage for non-vote transactions between Agave (Version 2.1.14) and Frankendancer (Version 0.406.20113), the KS-test shows that Agave’s CDF frequently lies below Frankendancer’s (visualized as blue dots). This suggests that Agave blocks tend to allocate more CUs to non-vote transactions compared to Frankendancer. Specifically, the probability of observing a block with lower CU usage for non-votes is higher in Frankendancer relative to Agave.

Interestingly, this does not correspond to a lower overall count of non-vote transactions; Frankendancer appears to outperform Agave in including non-vote transactions as well. Together, these findings imply that Frankendancer validators achieve higher rewards, driven by increased vote transaction inclusion and efficient CU utilization for non-vote transactions.

Frankendancer's ability to include more vote transactions may be explained by a limit in Agave: there is a maximum number of QUIC connections that can be established between a client (identified by IP address and node pubkey) and the server, ensuring network stability. The number of streams a client can open per connection is directly tied to its stake. Higher-stake validators can open more streams, allowing them to process more transactions concurrently, cfr. here. During high network load, lower-stake validators might face throttling and potentially miss vote opportunities, while higher-stake validators, with more bandwidth, can maintain consistent voting, indirectly affecting their influence in consensus. Frankendancer doesn't seem to suffer from the same restriction.
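To illustrate why stake-weighted stream limits can throttle small validators, here is a hypothetical Python sketch. The proportional formula, the floor of 8 streams, and the pool of 10,000 streams are placeholder values chosen for the example, not Agave's actual constants or formula.

```python
def max_streams(stake: int, total_stake: int, floor: int = 8, pool: int = 10_000) -> int:
    """Hypothetical stake-proportional stream budget with a floor for small validators."""
    if stake <= 0 or total_stake <= 0:
        return floor
    return max(floor, pool * stake // total_stake)


TOTAL = 1_000_000
for share, stake in (("0.01%", 100), ("1%", 10_000), ("5%", 50_000)):
    print(share, max_streams(stake, TOTAL))
# 0.01% 8
# 1% 100
# 5% 500
```

Under any rule of this shape, a small validator sits at the floor and has the least headroom when the network is congested, which is the mechanism the paragraph above suggests may cost it votes.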

Although inclusion of vote transactions plays a relevant role in Solana consensus, there are two other metrics worth exploring: Skip Rate and Validator Uptime.

Skip Rate measures a validator's ability to produce a block when selected as leader. A high skip rate means lower total rewards, mainly due to missed MEV and priority fee opportunities. Moreover, missing a high number of slots also reduces total TPS, worsening the end-user experience.

Validator Uptime impacts vote latency and consequently final staking rewards. This metric is estimated via Timely Vote Credits (TVC), which indirectly measure how quickly a validator lands its votes. A 100% TVC effectiveness means that a validator lands its votes within 2 slots.
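Both metrics reduce to simple arithmetic. The sketch below computes skip rate as skipped leader slots over assigned leader slots, and TVC effectiveness as credits earned over the maximum possible. The credit rule encodes our understanding of timely vote credits (16 credits for a vote landing within a 2-slot grace period, one fewer per extra slot of latency, never below 1); treat the constants as assumptions to verify against the Agave source.

```python
from typing import Sequence

VOTE_CREDITS_GRACE_SLOTS = 2  # assumed grace period, in slots of latency
VOTE_CREDITS_MAX = 16         # assumed maximum credits per vote


def skip_rate(leader_slots: int, produced_blocks: int) -> float:
    """Fraction of assigned leader slots for which no block was produced."""
    if leader_slots == 0:
        return 0.0
    return (leader_slots - produced_blocks) / leader_slots


def vote_credits(latency_slots: int) -> int:
    """Credits for a vote that lands `latency_slots` after the slot it votes on."""
    if latency_slots <= VOTE_CREDITS_GRACE_SLOTS:
        return VOTE_CREDITS_MAX
    return max(1, VOTE_CREDITS_MAX - (latency_slots - VOTE_CREDITS_GRACE_SLOTS))


def tvc_effectiveness(latencies: Sequence[int]) -> float:
    """Credits earned as a fraction of the maximum achievable for these votes."""
    if not latencies:
        return 0.0
    earned = sum(vote_credits(lat) for lat in latencies)
    return earned / (VOTE_CREDITS_MAX * len(latencies))


print(skip_rate(100, 96))            # 0.04
print(tvc_effectiveness([1, 2, 1]))  # 1.0
print(tvc_effectiveness([1, 3]))     # 0.96875
```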

As we can see, there are no major differences before epoch 755. Data shows a recent elevated Skip Rate for Frankendancer and a correspondingly low TVC effectiveness. However, since these metrics are based on averages and less stake runs Frankendancer, small fluctuations in Frankendancer's performance take longer to be reabsorbed.

The scheduler plays a critical role in optimizing transaction processing during block production. Its primary task is to balance transaction prioritization—based on priority fees and compute units—with conflict resolution, ensuring that transactions modifying the same account are processed without inconsistencies. The scheduler orders transactions by priority, then groups them into conflict-free batches for parallel execution by worker threads, aiming to maximize throughput while maintaining state coherence. This balancing act often results in deviations from the ideal priority order due to conflicts.

To evaluate this efficiency, we introduced a dissipation metric, D, that quantifies the distance between a transaction’s optimal position o(i)—based on priority and dependent on the scheduler— and its actual position in the block a(i), defined as

where N is the number of transactions in the considered block.

This metric reveals how well the scheduler adheres to the priority order amid conflict constraints. A lower dissipation score indicates better alignment with the ideal order. Note that D has an intrinsic component that reflects account congestion and the timing of transaction arrivals; ideally, these factors should be the same for all schedulers.
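Since the formula for D is not reproduced in this text, the following Python sketch assumes one natural form, the mean absolute displacement $D = \frac{1}{N}\sum_{i=1}^{N} |o(i) - a(i)|$; the article's actual definition may normalize differently. It also assumes the optimal position o(i) is the rank of transaction i when the block is sorted by priority alone, with ties keeping their block order.

```python
from typing import Dict, List


def dissipation(block: List[str], priority: Dict[str, float]) -> float:
    """Mean |o(i) - a(i)| over a block (ASSUMED form of D, see lead-in)."""
    n = len(block)
    if n == 0:
        return 0.0
    # o(i): rank when sorted by priority alone; sorted() is stable, so ties keep block order.
    ideal = sorted(block, key=lambda sig: -priority[sig])
    o = {sig: rank for rank, sig in enumerate(ideal)}
    # a(i): the transaction's actual index in the block.
    return sum(abs(o[sig] - a) for a, sig in enumerate(block)) / n


prio = {"x": 3.0, "y": 2.0, "z": 1.0}
print(dissipation(["x", "y", "z"], prio))  # 0.0
print(dissipation(["z", "y", "x"], prio))  # 1.3333333333333333
```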

Given the intrinsic nature of the dissipation, the numerical value of this estimator doesn't carry much relevance on its own. However, comparing the results for two schedulers tells us which one better resolves conflicts: a higher dissipation indicates a preference for conflict resolution over transaction prioritization.

Comparing the Frankendancer and Agave schedulers shows that dissipation is higher for Frankendancer, regardless of version. This is clearer in the dynamic KS test: only in very few instances did the Agave scheduler show a higher dissipation with statistically significant evidence.

Whether this better conflict resolution (and hence parallelization) stems from the scheduler implementation or from the QUIC implementation is hard to tell from these data. Indeed, better conflict resolution can also be achieved by having more transactions to select from.

Finally, comparing the percentiles of priority fees also hints at a different conflict resolution in Frankendancer. Indeed, even though the overall number of transactions (both vote and non-vote) and the extracted value are higher than on Agave, the median priority fee is lower.

In this article we provide a detailed comparison of the Agave and Frankendancer validator clients on the Solana blockchain, focusing on on-chain performance metrics to quantify their differences. Frankendancer, the initial iteration of Jump Crypto’s Firedancer project, integrates an advanced networking layer—including a high-performance QUIC implementation and kernel bypass—onto Agave’s runtime and consensus code. This hybrid approach aims to optimize transaction processing, and the data reveals its impact.

On-chain data shows Frankendancer includes more vote transactions per block than Agave, resulting in greater compute unit (CU) allocation to votes, a critical factor in Solana’s consensus mechanism. This efficiency ties to Frankendancer’s QUIC and scheduler enhancements. Its fd_quic implementation, with granular connection management and kernel bypass, processes packets more effectively than Agave’s simpler, semaphore-limited approach, enabling better transaction propagation.

The scheduler, fd_pack, prioritizes transactions by reward-to-compute ratio using treaps, in contrast to Agave’s priority formula based on fees and compute requests. To quantify how well each scheduler adheres to the ideal priority order amid conflicts, we developed a dissipation metric. Frankendancer’s higher dissipation, confirmed by KS-test significance, shows it prioritizes conflict resolution over strict prioritization, boosting parallel execution and throughput. This is further highlighted by Frankendancer’s median priority fees being lower.

A lower median priority fee combined with higher extracted value indicates more efficient transaction processing. For validators and delegators, this translates to increased revenue. For users, it means a better overall experience. Additionally, more votes for validators and delegators lead to higher revenues from SOL issuance, while for users, this results in a more stable consensus.

The analysis, supported by the Flipside Crypto dashboard, underscores Frankendancer’s data-driven edge in transaction processing, CU efficiency, and reward potential.